Computers in pathology: a long history

Adrien Foucart, PhD in biomedical engineering.

This website is guaranteed 100% human-written, ad-free and tracker-free. Follow updates using the RSS Feed or by following me on Mastodon

We (...) have an unusual situation that we wish to search for objects present in low concentration, the quantity of which is irrelevant, and the final classification of which is not agreed by different experts. At this point the engineer may well begin to feel despair. (A.I. Spriggs, 1969 [1])

This quote comes from a 1969 publication entitled "Automatic scanning for cervical smears". This paper is interesting for several reasons:

Specifically, Spriggs worries that the "Carcinoma in situ" which they are trying to detect "is not a clear-cut entity", with "a whole spectrum of changes" where "nobody knows where to draw the line and when to call the lesion definitely precancerous". To sum up: "We therefore do not really know which cases we wish to find". Moreover, "the opinions of different observers also vary".

On that latter point, Spriggs also notes that the classifications or grades used by pathologists are often more "degrees of confidence felt by the observer" than measurable properties of the cells. "It is therefore nonsensical to specify for a machine that it should identify these classes." The only reason to do it is that we have to measure the performance of the machine against the opinion of the expert. This is slightly less of a problem today, as the grading systems have evolved and become more focused on quantifiable measurements, but they still often allow for large margins of interpretation. As we match our systems against these grading systems, we constrain them to "mimic" the reasoning of the pathologists instead of focusing on the underlying problem of finding relationships between the images and the evolution of the disease itself.

"Digital pathology" really started to appear in the scientific literature around the year 2000. At that time, the focus was on telepathology or virtual microscopy: allowing pathologists to move away from the microscope, to more easily share images for second opinions, and to better integrate the image information with the rest of the patient's record [2, 3, 4].

Digital pathology also relates to image analysis. The 2014 Springer book "Digital Pathology" [5], for instance, includes in its definition not only the acquisition of the specimens "in digital form", but also "their real-time evaluation", "data mining", and the "development of artificial intelligence tools".

The terminology may be recent, but the core idea behind it (linking an acquisition device to a computer to automate the analysis of a pathology sample) is about as old as computers. One of the earliest documented attempts may be the Cytoanalyzer... in the 1950s.

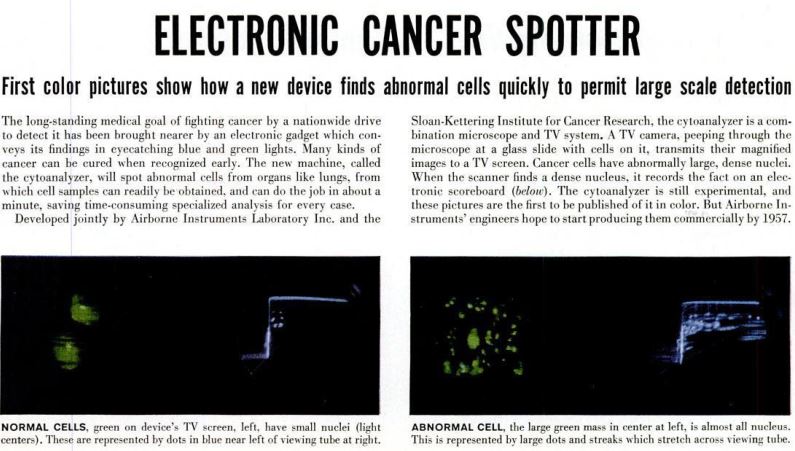

Two paragraphs in a 1955 issue of LIFE magazine, sandwiched between ads for mattresses and cars, present an "electronic gadget" which "will spot abnormal cells (...) in about a minute, saving time-consuming specialized analysis for every case". A more comprehensive description of the prototype was published by Walter E. Tolles in the Transactions of the New York Academy of Sciences [6].

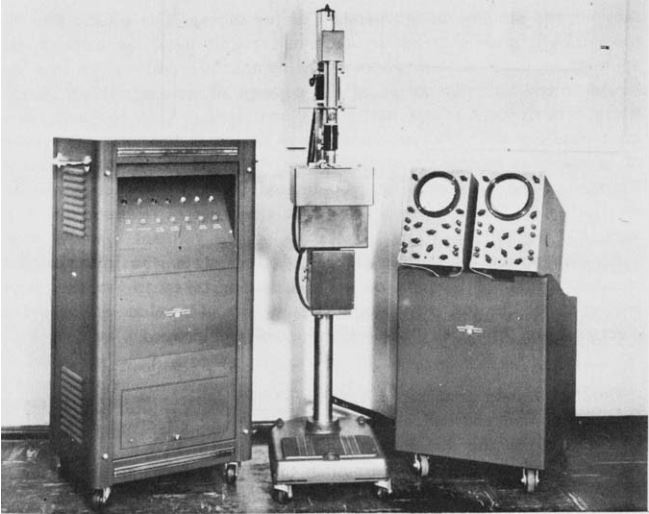

The Cytoanalyzer (see Fig. 2) had three units: the power supply and computer (left), the scanner (middle) and the oscilloscopes for monitoring and presentation (right). The scanner converted the "density field" of the slide into an electric current, which was used to analyse the properties of the cells and discriminate between normal and abnormal ones.

Two clinical trials of the Cytoanalyzer were conducted, in 1958-59 and in 1959-60. An analysis by Spencer & Bostrom in 1962 [7] considered its results to be "inadequate for practical application of the instrument".

Decades passed. Hardware and software vastly improved, yet even as new methods in image analysis and artificial intelligence got better at solving tasks related to pathology, they still fell short of the strict requirements of an automated system for diagnosis. In 2014, Hamilton et al. wrote that "even the most state of the art AI systems failed to significantly change practice in pathology" [8]. The problem is that medical diagnosis is generally made by integrating information from multiple sources: images from different modalities, expression of the symptoms by the patient, records of their medical history... AI systems can be very successful at relatively simple sub-tasks (finding nuclei, delineating glands, grading morphological patterns in a region...), but they are just unable, at this point, to get the "big picture". Not to mention, of course, all the thorny obstacles to widespread adoption: trust in the system, regulatory issues, insurance and liability issues, and so on.

More than 65 years after the Cytoanalyzer, routine use of AI in clinical practice for pathology appears to be very close... but we're still not there yet. The performance of deep learning algorithms, combined with the widespread use of whole-slide scanners producing high-resolution digital slides, makes the field of computer-assisted histopathology a very active and optimistic one at the moment. Still, even with the excellent results of Google Health's breast cancer screening system in clinical studies [9], it's not clear that automated systems are ready for real practice.

The difficulties of our algorithms are in large part the same as those identified by Spriggs in 1969: it is difficult or impossible to get an "objective" assessment of pathological slides and, even with modern grading systems, inter-expert disagreement is high. This makes training and evaluating algorithms more difficult, and when dealing with a subject as sensitive as healthcare, any result short of near perfection will have a hard time getting adopted by the medical community... and by the patients.
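To make the evaluation problem a bit more concrete, here is a minimal sketch of one common way to quantify inter-expert disagreement: Cohen's kappa, which measures the agreement between two raters corrected for the agreement expected by chance. This is only an illustration and not a method taken from the papers cited here. The grades for the ten slides, the label names and the `algorithm` predictions are all made up for the example.

```python
from collections import Counter


def cohen_kappa(labels_a, labels_b):
    """Cohen's kappa: agreement between two raters, corrected for chance."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two non-empty label lists of equal length")
    n = len(labels_a)
    # Observed agreement: fraction of slides that received the same label.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Chance agreement, estimated from each rater's own label frequencies.
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used one single, identical label
    return (observed - expected) / (1 - expected)


# Hypothetical grades for ten slides (invented data, for illustration only).
expert_1 = ["normal", "normal", "atypical", "atypical", "normal",
            "malignant", "atypical", "normal", "malignant", "normal"]
expert_2 = ["normal", "atypical", "atypical", "normal", "normal",
            "malignant", "malignant", "normal", "malignant", "normal"]
algorithm = ["normal", "normal", "atypical", "normal", "normal",
             "malignant", "atypical", "normal", "malignant", "normal"]

print("expert 1 vs expert 2:", round(cohen_kappa(expert_1, expert_2), 3))
print("algorithm vs expert 1:", round(cohen_kappa(algorithm, expert_1), 3))
print("algorithm vs expert 2:", round(cohen_kappa(algorithm, expert_2), 3))
```

The point of the sketch is that the score of the algorithm depends on which expert is taken as the reference, and that the agreement between the two experts gives a rough idea of how much of the remaining "error" may simply reflect the ambiguity of the grading itself.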