---- 4.1 Definitions

------ 4.1.1 Evaluation process

------ 4.1.2 Detection metrics

------ 4.1.3 Classification metrics

------ 4.1.4 Segmentation metrics

------ 4.1.5 Metrics for combined tasks

------ 4.1.6 Aggregation methods

---- 4.2 Metrics in digital pathology challenges

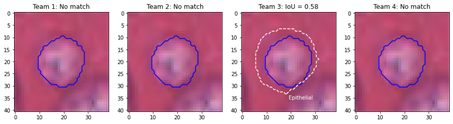

------ 4.2.1 Detection tasks

------ 4.2.2 Classification challenges

------ 4.2.3 Segmentation challenges

---- 4.3 State-of-the-art of the analyses of metrics

------ 4.3.1 Relations between classification and detection metrics

------ 4.3.2 Interpretation and problems of Cohen's kappa

------ 4.3.3 Imbalanced datasets in classification tasks

------ 4.3.4 Statistical tests

---- 4.4 Experiments and original analyses

------ 4.4.1 Foreground-background imbalance in detection metrics

------ 4.4.2 Imbalanced datasets in classification tasks

------ 4.4.3 Limit between detection and classification

------ 4.4.4 Use of detection metrics for instance classification

------ 4.4.5 Agreement between classification metrics

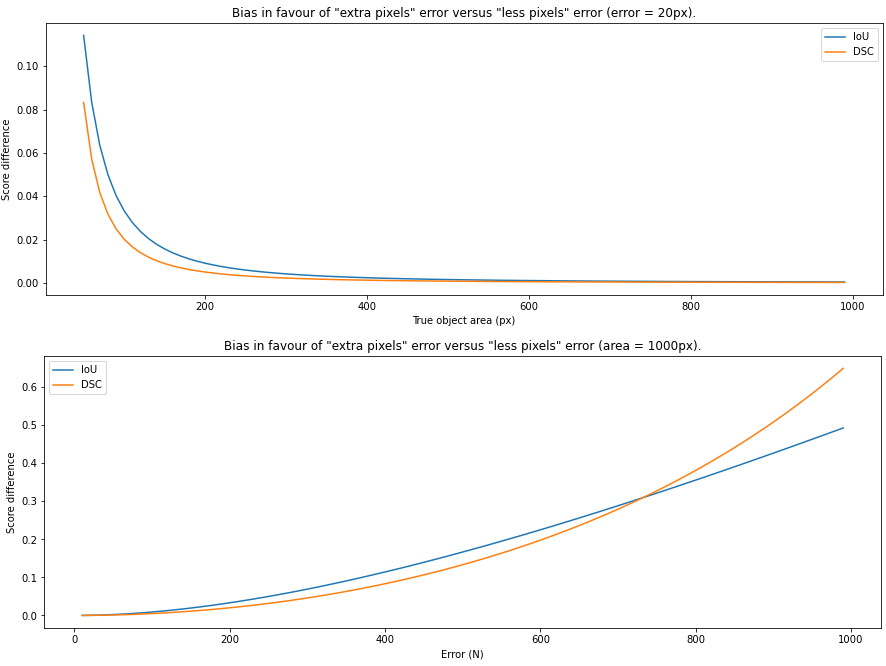

------ 4.4.6 Biases of segmentation metrics

------ 4.4.7 Multi-metrics aggregation: study of the GlaS 2015 results

------ 4.4.8 Why panoptic quality should be avoided for nuclei instance segmentation and classification

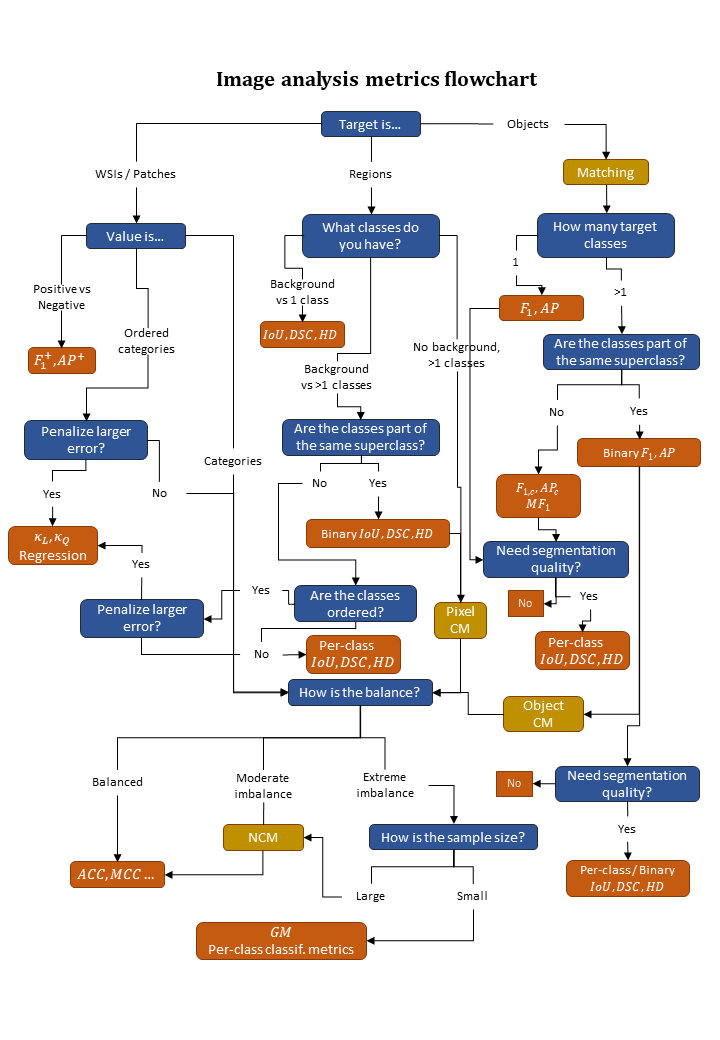

---- 4.5 Recommendations for the evaluation digital pathology image analysis tasks

------ 4.5.1 Choice of metric(s)

------ 4.5.2 Using simulations to provide context for the results

------ 4.5.3 Disentangled metrics

------ 4.5.4 Statistical testing

------ 4.5.5 Alternatives to ranking

---- 4.6 Conclusion

4. Evaluation metrics and processes

In challenges or in any publication that aims to determine if a particular method improves on the state-of-the-art for a particular task, the question of how to evaluate the methods is extremely important. At the core of any evaluation process, there is one or several evaluation metrics. Different metrics have been proposed and have achieved a more-or-less standard status for all types of tasks, and many studies have been made to examine their behaviour in different circumstances. Moreover, the evaluation process is not limited to the metric itself.

In this chapter, we will start in section 4.1 by providing the necessary definitions for the description of the evaluation process of digital pathology image analysis tasks, and of the common (and less common) evaluation metrics used for detection, classification, and segmentation tasks, as well as for more complex tasks that combine these different aspects. The use of these metrics in digital pathology challenges will then be discussed in section 4.2, and the state-of-the-art of the analysis of their biases and limitations in section 4.3.

We will then present our additional analyses and experiments in section 4.4, and provide recommendations on the choices that can be made when determining the evaluation process for a digital pathology task in section 4.5.

4.1 Definitions

Most of the formal definitions of the metrics used in this section are adapted from several publications and reworked to use the same mathematical conventions. For the detection metrics, we largely rely on the works of Padilla et al. [38], [39]. Most of the classification metrics can be found in Luque et al. [32], while the definitions for the segmentation metrics are given in Reinke et al. [40]. The evaluation process in general described here is largely based on our analysis of the processes described in all the digital pathology challenges referenced in section 4.2.

4.1.1 Evaluation process

The evaluation of an algorithm in an image analysis task starts from a set of “target” samples (usually from expert annotations and considered to be the “ground truth”), associated to a set of predictions from the algorithm. In the final evaluation of an algorithm, the samples would come from the “test set” extracted from the overall dataset, which should be as independent as possible from the training and validation set. The exact nature of the samples will depend on the task.

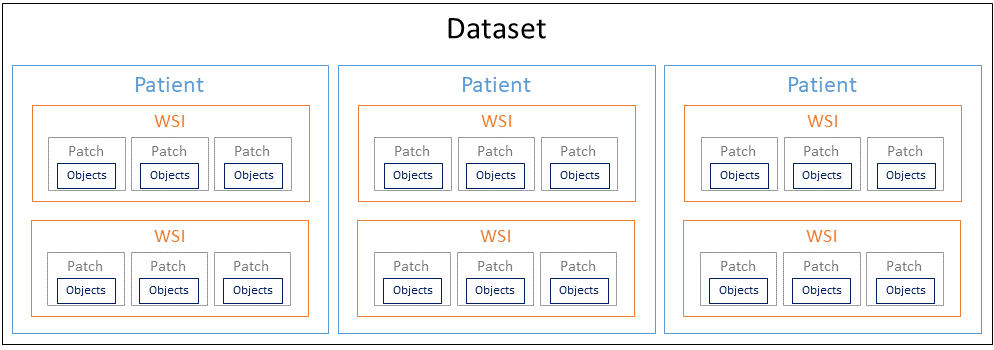

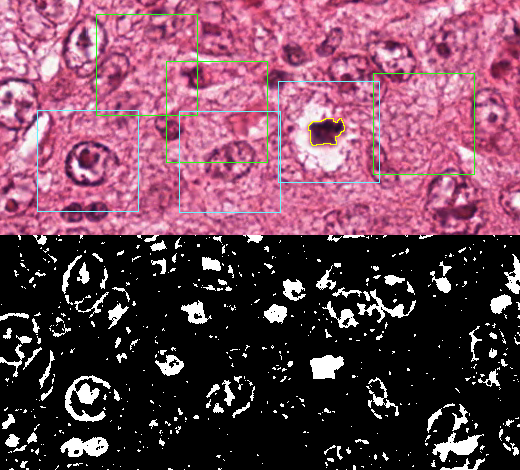

Digital pathology datasets have a hierarchical nature, with the top-level being the patient. From each patient, different whole-slide images (WSI) may have been acquired. From each of those WSIs, different image patches may have been extracted, and often each of these patches will contain a set of individual objects of interest (as illustrated in Figure 4.1).

In the DigestPath 2019 challenge1, for instance, two different datasets are described. The “signet ring cell dataset” contains objects (the signet ring cell) in 2000x2000px image patches extracted from WSIs of H&E stained slides of individual patients. In the “colonoscopy tissue segment dataset,” however, WSIs have been directly annotated at the pixel-level, so there are no “patch-level” or “object-level” items.

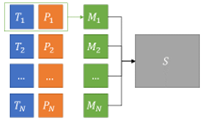

A metric is thus defined as a function that evaluates a pair of “target” (ground truth) and “predicted” items (e.g. object localization, patient diagnostic class…). Most metrics will produce outputs either in the or the range, but there are exceptions (such as distance-based metrics, which are typically in ). The aggregation process, on the other hand, describes how the set of metric values at a given level is reduced to a single score at a higher level (see Figure 4.2).

With these definitions in mind, we can now turn our attention to the three main “task types” that we previously identified in Chapter 1, as well as their combinations.

In a detection task, there will typically be an object-level annotation. To evaluate such a task, the first step is therefore to find the matching pairs of objects in the images. The matching criteria are therefore an important factor in the evaluation process. They typically involve a matching threshold, for instance based on the centroid distance or the surface overlap. Once the matching pairs of objects have been found, there are two separate aspects of the results that can be evaluated. First, a partial confusion matrix can be built:

With TP the “True Positives” (number of matching pairs), FN the “False Negatives” (number of un-matched ground truth objects), FP the “False Positives” (number of un-matched predicted objects), and is the cardinality of a set. It should be noted that, at the object-level, there are no countable “True Negatives,” which will limit the available metrics for evaluating the detection score [38].

The other possible aspect of the evaluation is the quality of the matches. This can take different forms depending on what exactly the target output was: bounding box overlap, centroid distance, etc.

In a classification task, the annotation could be at the object level (instance classification), at the pixel level (semantic segmentation), at both levels (instance segmentation and classification), or at any of the “higher” levels in Figure 4.1: patch, WSI, or even patient. The latter cases correspond to “pure” classification tasks, whereas the others are combining classification with detection and/or segmentation. The classification part of the problem can typically be summed up with a confusion matrix, built from pairs of where and is the number of classes in the problem, and with a vector of class probabilities so that . The confusion matrix will therefore be a matrix with .

The distinction between “classification” tasks and “detection” tasks can sometimes be difficult to make. In many digital pathology applications, there will be one “meaningful” class (for instance: nuclei, tumour, etc.), and a “no class” category that includes “all the rest.” In those cases, there will be clear “positive” and “negative” categories, and the confusion matrix will include the TP, FP, FN (and, in this case, countable TNs) of the detection tasks. As we will see further in this chapter, this can be a source of confusion in the definition of the metrics, as some metrics, such as the F1-Score, take a different form if a single “positive” class is considered (as in a detection task) or if two or more classes are considered on equal grounds.

The combination of “detection” and “classification” is instance classification, which can also be seen as multi-class detection. In such problems, the results can be described in a confusion matrix that includes multiple classes and a background or no-instance category. The confusion matrix for an m-class problem would therefore look like:

Where the first line corresponds to falsely positive detections (i.e. detections for any of the classes that correspond to no ground truth object) and the first column to falsely negative detections (i.e. ground truth objects that have no predictions). As in the previously described detection confusion matrix, the top-left corner is uncountable and corresponds to the true negative detections.

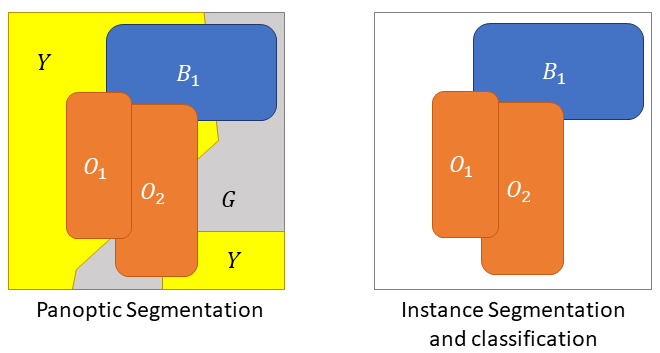

In segmentation tasks, the annotations will be at the pixel-level. These annotations can be simply binary (an “annotation mask”), or also contain class labels (“class map”) and/or instance labels (“instance map”). We will therefore have and , with the pixel position. In an instance segmentation case, there will once again need to be a matching criterion to find the matching subsets and , so that the evaluation can be reduced to several binary “object-level” annotation masks. In semantic segmentation, there is no matching step necessary. Instead, a segmentation metric will often simply be computed per-class, then an average score can be computed. The most common evaluation metrics can be defined from pixel-level confusion matrices, or as distance metrics that compare the contours and/or the centroids of the binary masks.

When all three tasks are combined together, we get instance segmentation and classification. In such problems, each pixel can be associated both to a class and to an instance label. The evaluation of such problems can be quite difficult, as will be discussed through this chapter.

4.1.2 Detection metrics

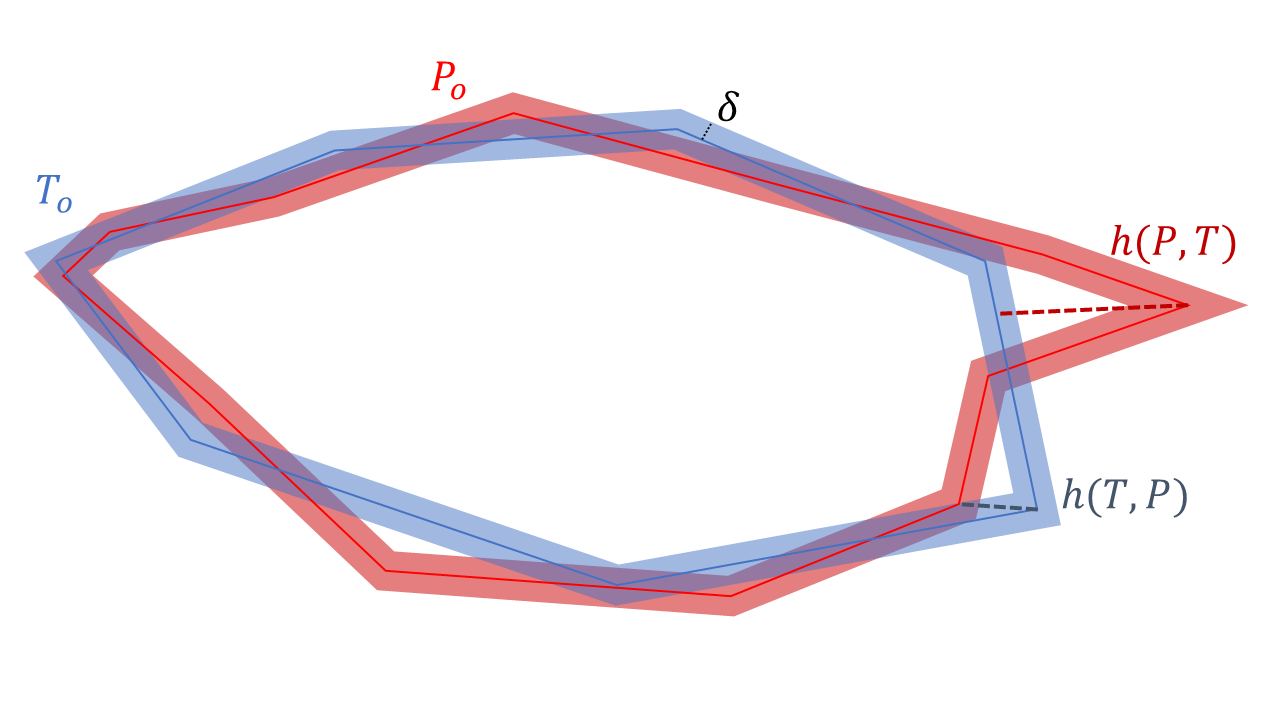

As mentioned above, detection tasks typically require a matching step before the evaluation, often involving a matching threshold . The matching step takes the sets of Target objects and Predicted objects and outputs three sets: the true positives, the un-matched false negatives, and the un-matched false positives.

These sets are related to a confidence threshold on the detection probability (which is the raw output of the detection algorithm), that determines if a candidate region is considered as a detected object or not: , with the detection probability for the object [38], with usually set to 0.5.

Higher confidence thresholds will yield a smaller set of , which means that the following relationships are always verified:

Detection metrics will therefore be either “fixed-threshold” metrics, bound to a particular choice for the matching and confidence thresholds, or “varying-threshold,” capturing the evolution of the metric as the thresholds are moved.

4.1.2.1 Object Matching

In most cases, object detection outputs include some sort of localisation, which gives an indication of where is the object in the image, and what is its size. In the most precise case, we have the full segmentation of the object, and are therefore in an “instance segmentation” problem. More often, the localisation will be given in the form of a bounding box with a centroid. In the extreme opposite case, there is no localisation at all, and simply an indication of whether an object is present in the image or not. There is then no “matching” step, and the problem is formulated as a binary classification problem, with the “no object” and “object” class being determined at the image patch level.

The matching step generally is “overlap-based” and/or “distance-based.”

Given a pair of target and predicted objects and , represented as a set of pixels belonging to this object (which may come either from bounding boxes or per-pixel instance masks), overlap-based methods will look at the intersection area () and the union area () of the two objects. These two measures can be combined in the intersection over union (IoU), also known as the Jaccard Index [24]:

A common criterion is to apply a threshold on this IoU, so that a pair of objects is “a match” if , with often set at 0.5 or 0.75 [39]. If , it is possible for multiple target objects to be matched to the same predicted objects (and vice-versa), in which case a “maximum IoU” criterion is typically added to the rule (so that the match is the predicted object with the maximum IoU among those that satisfy the threshold condition). In that case, we will therefore have: where all three following conditions are respected:

The maximum IoU can also be used as a single criterion, with no threshold attached, in which case any overlap may be detected as a match (this is equivalent to setting ).

Another strategy to determine a match relates to the distance between the centroids of the objects. Similarly to the IoU criterion, this will imply a “closest distance” rule, which may be associated with a “maximum distance threshold.” The criteria will therefore be:

With usually defined as the Euclidian distance between the centroids of and .

The same rule can be adapted to other distance definitions, such as for instance the Hausdorff’s distance (defined in the segmentation metrics below) between the contours of the objects.

4.1.2.2 Fixed-threshold metrics

Fixed-threshold metrics are computed for a specific value of the matching threshold (commonly, ) and of the confidence threshold (also usually ), which determine the sets TP, FP and FN.

The precision (PRE) of the detection algorithm is the proportion of “positive detections” that are correct, and is defined as:

The recall (REC) is the proportion of “positive target” that have been correctly predicted, and is defined as:

The F1-score (F1) is the harmonic mean of the two:

4.1.2.3 Varying-threshold metrics

To better characterize the robustness of an algorithm’s prediction, it is sometimes useful to look at how the performance evolves with different values of the confidence and/or matching thresholds.

Decreasing the confidence threshold means being less restrictive on what’s considered a “prediction,” and therefore to more true positives, more false positives and less false negatives. There is thus a monotonic relationship between the confidence threshold and the recall. The relationship between the precision and the confidence threshold, however, is less predictable.

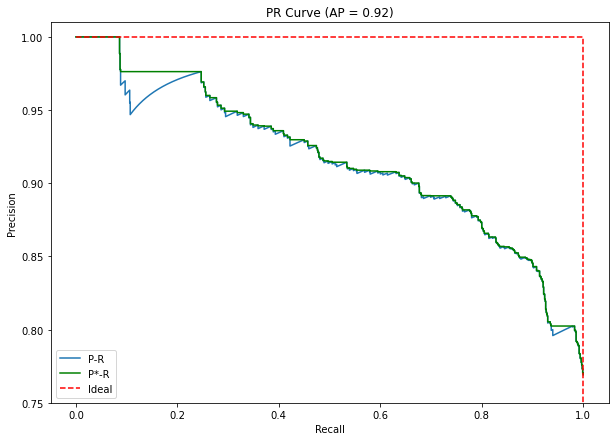

This dynamic relationship between PRE, REC and the confidence threshold can be captured in a “precision-recall curve” (PR curve). An example of a PR curve is shown in Figure 4.3, constructed from synthetic data. As we can see, while the overall trend is for the precision to decrease as the recall increases, the relationship is not monotonic, and the precision has a characteristic “zig-zag” pattern.

To summarize the performance shown by the PR curve, the “area under the PR curve” (AUPRC) can be computed. An ideal detector would have . A very common approximation of the AUPRC, that is less sensitive to the small oscillations of the precision, is the “Average Precision” (AP). Adapting the formulation from Luque et al. [39], we can express the PR curve as a function , and the AP is obtained by:

- Interpolating the PR curve so that , thus removing the zigzag pattern by replacing each point by the “highest value to its right” (see green line in Figure 4.3).

- Sampling with equally spaced recall values, so that

The AP is therefore a varying-threshold metric for the confidence threshold, but a fixed-threshold metric for the matching rule. To also take into account this matching rule variability, the AP can be averaged over different matching threshold values. As an example, in the NuCLS 2021 challenge [3], one of the metrics used to evaluate the detection score is the mean AP using an IoU threshold varying between 0.5 and 0.95, by step of 0.05. If we set to mean the AP using the matches found with , then we have:

4.1.3 Classification metrics

The results of a classification problem with categories are summarized in a confusion matrix. We will use here the convention that the rows of that matrix correspond to the “ground truth” class, while the columns correspond to the “predicted” class, and corresponds to the number of examples with “true class” and “predicted class” . It should be noted, however, that some of the classification metrics may be used to simply characterize the agreement between two sets of observations, without one being assumed to be the “truth.” To be useful for that latter purpose, a metric needs to be observer invariant. Practically, this is the case if (or, from the sets of ground truth and predicted observations : ).

Another characteristic of classification metrics is that they can be class-specific or global. A class-specific metric will evaluate class as a “positive” class against all others, while global metrics aggregate the class-specific values.

Metrics can also assume an order to the classes or, on the contrary, that they are purely categorical. In the former case, switching the classes in the confusion matrix would lead to a different result, while categorical metrics are class-switch invariant.

Finally, another aspect (that we more thoroughly examine in sections 4.3 and 4.4) is the class imbalance bias that many metrics exhibit. This means that changing the class balance of the dataset (e.g. by changing the sampling method) alters the results.

4.1.3.1 Class-specific metrics

Several per-class metrics can be defined. These use the notions of the True Positives (TP), True Negatives (TN), False Positives (FP) and False Negatives (FN). Using our general notation for the confusion matrix, we can have for class :

An example on a 5 classes problem is shown in Table 4.1.

| T \ P | |||||

|---|---|---|---|---|---|

The sensitivity (SEN), also called recall (REC) or True Positive Rate (TPR) measures the proportion of “True Positives” among all the samples that are “positive” in the Target (i.e. that truly belong to the class ):

The specificity (SPE), or True Negative Rate (TNR) is conversely the proportion of TN in the negative Target samples:

The precision (PRE) or Positive Predictive Value (PPV) is the proportion of TP in the positive Predicted samples:

The Negative Predictive Value (NPV) is similarly the proportion of TN in the negative Predicted samples:

The False Positive Rate (FPR) is the proportion of FP in the negative Target samples:

The F1-Score is better defined as a detection metric (see section 4.1.2), but it is often used in classification problems as well.

The per-class F1-Score () is defined as the harmonic mean of the precision and sensitivity:

The per-class Geometric Mean () of the SEN and SPE is also sometimes used [32]:

4.1.3.2 Global metrics

To get a global classification score that aggregates the results from all classes, several metrics are possible.

The simplest performance measure from the confusion matrix is the accuracy (ACC), which is given by the sum of the elements on the diagonal (the “correct” samples) divided by the total number of samples :

It is also possible to extend the F1-Score so that it becomes a global metric. The macro-averaged F1-Score has been defined in two different ways in the literature [42].

First, as a simple average of the per-class F1-scores. We call this version the simple-averaged F1-Score ():

The other definition first computes the average of the per-class PRE and SEN, before computing the harmonic mean. We will call it the harmonic-averaged F1-Score ():

With:

Meanwhile, the micro-averaged F1-Score () will first aggregate the TP, FP and FN before computing the micro-precision and micro-recall, and finally the F1-Score. It has been used in challenges such as Gleason 2019, but it should be noted that it is equivalent to the accuracy:

Like the F1-Score, the can also be extended as a global metric. It will then be the Geometric Mean (GM) of the per-class :

The GM has zero bias due to class imbalance [32]. It is, however, not observer invariant.

The Matthews Correlation Coefficient (MCC), often called in the multiclass case (and sometimes phi coefficient), uses all the terms of the CM and is defined as:

It is bound between -1 and 1, where 0 means that the target and prediction sets are completely uncorrelated.

Cohen’s kappa is a measure of the agreement between two sets of observations and can be used as a classification metrics to rate the agreement between the target and the predictions. It measures the difference between the observed agreement and the agreement expected by chance , and is defined as [8]:

So that a “perfect agreement” () leads to , a perfect disagreement () to a negative (the exact minimum value depends on the distribution of the data). From the confusion matrix, and are defined as:

Cohen’s kappa is also often used for ordered categories, where errors between classes which are “close” together are less penalised.

Let be a weight matrix ( should verify , and ).

We can define the matrix of expected observations from random chance with:

From that, we define Cohen’s kappa generally with:

The three most common formulations of the weights are:

Unweighted kappa : (using the Kronecker delta), which resolves to the same value as the original definition of the shown above.

Linear weighted kappa :

Quadratic weighted kappa :

Cohen’s kappa is bound between -1 and 1, with 0 corresponding to as much agreement as expected by random chance.

4.1.3.3 Varying-threshold metrics

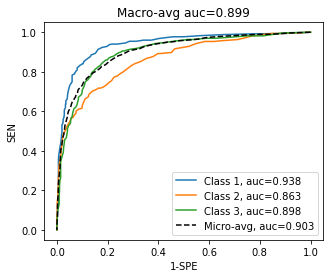

All the classification metrics described thus far are “no threshold” metrics, where the predicted class of a sample is simply taken as the class which has the maximum predicted probability. It can however also be interesting to take into account the “confidence” of a model with regards to its predictions. This information can be captured in a “Receiver Operating Characterstic,” or ROC Curve. Where the detection PR-curve plotted the PRE and REC for varying confidence thresholds, the ROC curve plots the SEN (=REC) and the FPR (=1-SPE).

As the SEN and SPE are “per-class” metrics, so is the ROC a “per-class” visualisation, where the “varying threshold” is used in a “one class vs all” manner. For a class , a threshold , and the set of predicted class probabilities vectors where is the probability that sample has the class , we can define:

From which and can be computed.

To summarize the information contained in the ROC curve, the Area Under the ROC () can be computed for a class, so that an “ideal classifier” for that class would have an of 1. As for the F1-Score, we can also define micro-averaged and macro-averaged summaries of the .

The macro-average is defined as:

While the micro-averaged is defined by first summing the TP, FP, TN and FN:

, etc.

Then computing the resulting , , and finally the from those micro-averaged values. An example on synthetic data is presented in Figure 4.4.

A summary of the main characteristics of the metrics discussed here can be found in Table 4.2.

| Metric | Range of values | Class-specific or global | Observer invariant | Class imbalance bias | Ordered |

|---|---|---|---|---|---|

| [0, 1] | Class-specific | No | No | No | |

| [0, 1] | Class-specific | No | No | No | |

| [0, 1] | Class-specific | No | High | No | |

| [0, 1] | Class-specific | No | High | No | |

| [0, 1] | Class-specific | Yes | High | No | |

| [0, 1] | Class-specific | No | No | No | |

| [0, 1] | Global | Yes | Medium | No | |

| [0, 1] | Global | Yes | Low | No | |

| [0, 1] | Global | Yes | Medium | No | |

| [-1, 1] | Global | Yes | Medium | No | |

| [-1, 1] | Global | Yes | High | No | |

| [-1, 1] | Global | Yes | High | Yes | |

| [0, 1] | Global | No | No | No | |

| [0, 1] | Class-specific | No | No | No | |

| [0, 1] | Global | No | No | No | |

| [0, 1] | Global | No | No | No |

4.1.4 Segmentation metrics

We consider in this section pure “segmentation” tasks that separate a foreground region from a background region. Instance and semantic segmentation will be considered in the “combined metrics” section. Segmentation metrics therefore compare two binary masks in an image. Let be the set of pixels that belong to the target (“ground truth”) mask, and the set of pixels that belong to the predicted mask. There are two main categories of segmentation metrics: those that measure the overlap between the two sets, and those that measure a distance between the contours of the and masks, labelled and respectively.

In the following definitions, is the cardinality of the set and indicates all the elements that are not in the set.

4.1.4.1 Overlap metrics

The overlap metrics can also be defined using a “confusion matrix” based on the binary segmentation masks and (we use here boldface to denote the mask matrices instead of the sets), where for all , otherwise. The TP, FP, FN and TN are then defined as:

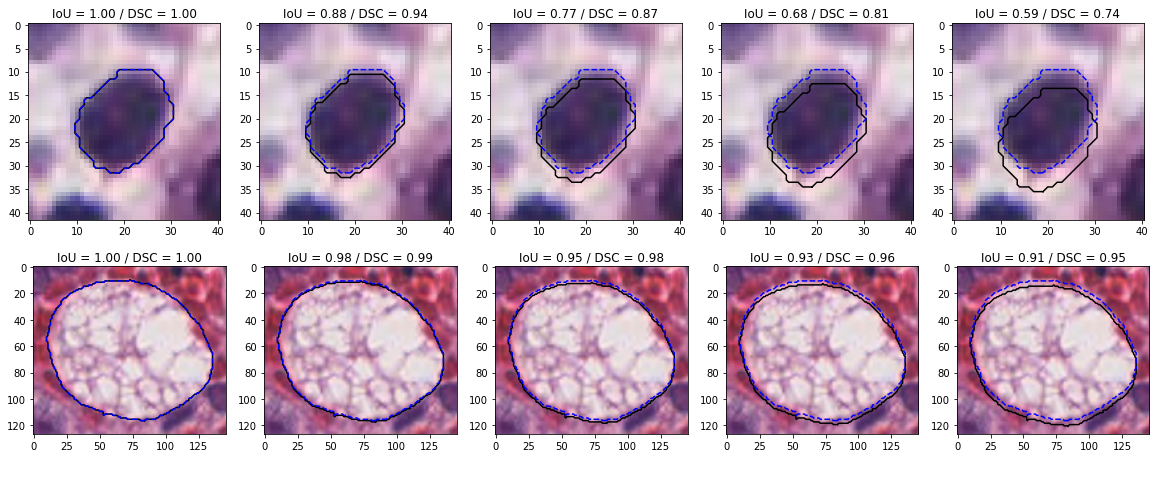

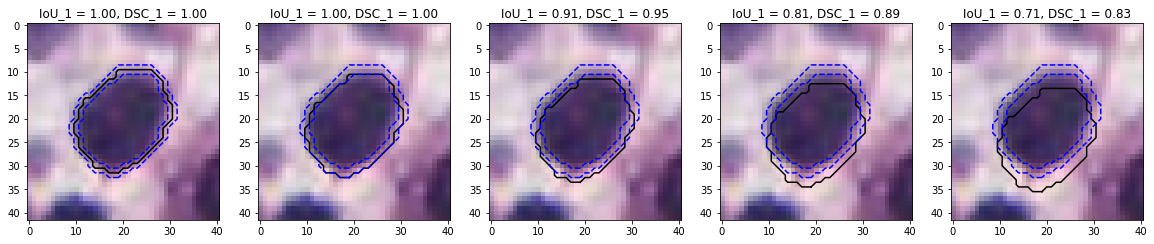

The two most commonly used overlap metrics are the Dice Similarity Coefficient (DSC) and the Intersection over Union (IoU), while the most common distance-based metric is Hausdorff’s Distance (HD). The IoU, DSC and HD are illustrated in Figure 4.5.

![Figure 4.5. Illustration of the three “basic” segmentation metrics. Adapted from our review [15].](./fig/4-5.png)

The IoU is defined as:

It is bounded between 0 (no overlap) and 1 (perfect overlap).

Using the confusion matrix notation, it can also be defined as:

The DSC is defined as:

It is also bounded between 0 and 1, and heavily correlated to the IoU, as the two are linked by the relationship:

That relationship also means that is always verified.

Like the IoU, we can define it in terms of the confusion matrix values:

Which shows that it simply corresponds to a “per-pixel” definition of the F1-score.

4.1.4.2 Distance metrics

For Hausdorff’’s distance (HD), only the contours and are considered. First, the Euclidean distances from all points in to the closest point in are computed, and the maximum value is taken:

Then, the same thing is done starting from all the points in :

Hausdorff’s distance is then defined as the maximum of those two values:

This process is illustrated in Figure 4.6.

As the HD is extremely sensitive to outliers, it is also sometimes preferred to slightly relax the “maximum,” and to replace it with a percentile :

With being the most frequent choice.

While a HD of 0 corresponds to a perfect segmentation, its maximum value is unbounded. This makes it a particularly tricky metric to use in aggregates, as it is difficult to assign a value, for instance, to a “missing prediction” (i.e. an image where ).

While less commonly used, some other distance-based metrics exist. Reinke et al.’s review [40] notably mention the Average Symmetric Surface Distance (ASSD) and the Normalized Surface Distance (NSD).

The ASSD is defined as:

For each point of the contour, the distance to the other contour is computed. The final value is the average distance computed from both contours. Compared to the HD, it will tend to penalize more a segmentation that is consistently wrong by a small amount than a segmentation that is almost perfect but with a few large errors.

The NSD, meanwhile, is a metric that takes into account the uncertainty of the annotations by first defining “boundary regions” and , which are the set of all pixels within a certain tolerance distance of the contours and :

The NSD is then defined as:

This same idea of a “tolerance” could easily be applied to the HD as well by redefining the and as:

So that the “uncertainty-aware” is given by:

4.1.4.3 Segmentation as binary classification

The IoU and DSC metrics, in their “confusion matrix” definitions, are essentially “pixel detection” metrics, which do not take into account the “true negatives” and consider that there is a “positive” class, the foreground, and a “negative” class, the background. But once we have established the confusion matrix, it is also possible to treat the problem as a “binary classification” problem, including the true negatives. The classification metrics defined in 4.1.3 can therefore also be used. The ACC or the MCC, for instance, can easily be computed.

The main difference of this approach is that it does not consider that the “foreground class” is inherently more important than the “background class,” and that the metric should reflect that correctly identifying that a pixel is in the background is just as important as identifying one in the foreground.

A key benefit is that it becomes trivial to extend the metrics to the semantic segmentation case, as adding new classes just means adding new rows and columns to the confusion matrix, but the formula for computing the metrics remains unchanged.

The main drawback of this approach is that, when the segmentation metric is computed on patches extracted from a larger image (for instance, comparing just the masks of a detected object and its matching ground truth object), the result of the metrics becomes highly dependent on the size of the patches, and the amount of background included in it.

Binary segmentation problems are indeed typically not really “two classes” problems, but rather “one class” class problems where we detect one class and put all others in the same bin. The “per-pixel binary classification” approach would therefore only make sense if the problem can actually be defined as a two classes problem.

4.1.5 Metrics for combined tasks

The most common approach for the evaluation of combined tasks (instance segmentation, semantic segmentation, instance classification, instance segmentation and classification) is to combine “basic” metrics to create a new one which attempts to summarize all aspects of the task into a single score.

The Gleason 2019 challenge, for instance, combines a classification with a micro-averaged and a macro-averaged segmentation F1-Score into a score . The SNI challenges, meanwhile, combine a simple “binary” DSC (on the whole image and not on separate instances) with a sort of micro-averaged DSC where the numerator and denominator of the DSC are separately aggregated over the set of matching instances. A similar idea is used in the MoNuSeg challenge with the “Aggregated Jaccard Index” (AJI), where the Intersection and the Union are aggregated over the matches.

For the most complex task of instance segmentation and classification, the “Panoptic Quality” (PQ), originally proposed by Kirillov et al. [27] for natural scenes, has recently been introduced to digital pathology by Graham et al. [20].

The PQ considers each class separately. For each class , is the set of ground truth instances in the class. Given a set of corresponding class predictions , the is computed in two steps.

First, the matches between ground truth instances and predicted instances are found (using the matching threshold). Using this strict matching rule, each segmented instance in and can be counted as TP, FP or FN.

Then, the of the class in the image is computed as:

Which can be decomposed into:

With the “Recognition Quality,” corresponding to the per-object F1-score of the class , and the “Segmentation Quality,” corresponding to the average IoU of the matching pairs of ground truth and predicted instances. The is also often referred to as the Detection Quality, and therefore noted as [43], [20].

4.1.6 Aggregation methods

A critical step to arrive at a final “score” for an algorithm on set of test samples is the aggregation of the per-sample metric(s). Let us therefore go back to the hierarchical representation of digital pathology datasets that we used at the beginning of the chapter (see Figure 4.1).

The aggregation process can happen in different dimensions: across that hierarchical representation, across multiple classes, and across multiple metrics.

4.1.6.1 Hierarchical aggregation

The aggregation across the hierarchical aggregation has two aspects: where are the metrics computed, and how are they averaged?

Object-level metrics would typically be those used in instance segmentation (per-object IoU, HD…). In a “bottom-up” approach, all the object-level measures could be averaged over a patch, then the patch-level measures over a WSI, the WSI-level over a patient, and finally the patient-level measures over the whole dataset. The problem of this approach is that, if the patches are very dissimilar in their object distribution, it may lead to cases where a single error on a patch with few objects is penalized a lot more harshly than an error on a larger patch with a larger population of objects. A more robust approach will therefore generally be to rather aggregate the per-object metrics over a WSI or a patient. It is also possible to directly average the object-level metrics on the entire dataset, skipping intermediate levels entirely. In the digital pathology context, having a patient-level distribution of a metric is however often desirable for a clinically relevant analysis of the results of an algorithm, as the goal will generally be to be able to validate that the performance of an algorithm is good across a wide range of patients. It also makes it possible to use powerful statistical tests to compare different algorithms together, as patients can then be used as paired samples.

Patch-level metrics are very commonly used in all sorts of tasks. It could be a region segmentation metric like the IoU or DSC, an object detection metric like the F1-Score, or a classification metric like the ACC or the MCC. It is once again possible to directly average those results on the entire dataset, or to go through the patient-level first.

Another possibility for many of those metrics is to not compute the metric at the patch level, but to aggregate its base components over a patient or WSI. For instance, a confusion matrix can be built over multiple patches of a WSI, before computing the relevant classification or detection metrics on this aggregated confusion matrix. This reduces the risk of introducing biases from the patch selection and focuses the results on the object of interest of pathology studies: the patient. We therefore find here again the same choices of micro- and macro-averaging that were described in the classification metrics.

4.1.6.2 Multiclass aggregation

When multiple classes are present, detection and segmentation metrics are generally first computed independently for each class before being aggregated together. This aggregation can also happen at any point in the hierarchy of the dataset. For the PQ metric, for instance, some aggregate the at the image patch level as , then find the global [43], [20], [18], while others averages each separately over all patches as , then define the global [19] (with the number of images and the number of classes). As always, the micro- or the macro-averaging approaches can also be considered. In a micro-averaging approach, the metric must first be decomposed into its base components, which are aggregated separately before computing the metric. For most classification or detection metrics, the base components would be the elements of the confusion matrix. For combined metrics such as the PQ or some custom score, then a choice must be made as to what constitutes a “base component.” Practically, almost all publications and challenges use a macro-averaging approach.

4.1.6.3 Multi-metrics aggregation

Single metrics are not capable of fully representing the capabilities of a given model or algorithm. This is true even for simple tasks: as we have seen, different metrics have distinct behaviours and biases, and may therefore miss some important insights about a particular algorithm. A clear example would be the IoU and the HD for segmentation metrics, which give very different information about the same task. As noted in section 4.1.5, combined tasks are often evaluated by creating complex metrics that combine basic detection, classification and segmentation metrics in some ways. Another approach, however, is to compute and report those simple metrics independently, to provide more detailed information about the predictive performance. Computing multiple metrics, however, means that algorithms cannot necessarily be ranked based on a single score, thus making the overall ranking (and therefore the choice of the “best method”) more difficult to obtain.

A possible solution is to compute the ranks separately for the different metrics, then to combine them with, for instance, the “sum of ranks.” As we will discuss below in section 4.4.7, this approach comes with its own pitfalls and challenges.

4.2 Metrics in digital pathology challenges

We examine here the evaluation metrics used in the challenges previously presented in Chapter 3, to get a sense of the common choices made by challenge organizers when determining the evaluation process. We look here mainly at the “basic” metrics (i.e. pure detection, classification or segmentation), and we will note when those metrics are included as part of a more complex evaluation score.

4.2.1 Detection tasks

A summary of the evaluation metrics used in digital pathology detection challenges can be found in Table 4.3. Almost all challenges used the F1-Score as the primary metric for ranking the participants’ submissions. Some challenges reported the PRE and REC separately. The only “challenge” to use varying-threshold metrics is NuCLS 2021. This dataset, however, is presented more as a benchmark for future usage by researchers than as a challenge, despite being listed on the grand-challenge.org website. In the baseline results reported by Amgad et al. [3], the AP using a fixed matching threshold of 0.5 on the IoU, and the mAP using varying matching thresholds from 0.5 to 0.95 are used, so that both the confidence threshold and the IoU threshold are varying in the metric. DigestPath ranks three different metrics separately, then use the average rank as the overall rank for each competing team.

| Challenge | Target | Metric(s) |

|---|---|---|

| PR in HIMA 2010 | Centroblasts in follicular lymphoma. | REC, SPE2 |

| MITOS 2012 | Mitosis in breast cancer. | F1, PRE, REC |

| AMIDA 2013 | Mitosis in breast cancer. | F1, PRE, REC |

| MITOS-ATYPIA 2014 | Mitosis in breast cancer. | F1, PRE, REC |

| GlaS 2015 | Prostate glands | F1 |

| TUPAC 2016 | Mitosis in breast cancer. | F1 |

| LYON 2019 | Lymphocytes in breast, colon and prostate. | F1 |

| DigestPath 2019 | Signet ring cell carcinoma. | PRE, REC, FPs per normal region, FROC3. |

| MoNuSAC 2020 | Nuclei | F1 (as part of the PQ) |

| PAIP 2021 | Perineural invasion in multiple organs. | F1 |

| NuCLS 2021 | Nuclei in different organs. | AP@.54, mAP@.5:.95 |

| MIDOG 2021 | Mitosis in breast cancer. | F1 |

| Conic 2022 | Nuclei | F1 (as part of the PQ) |

:Table 4.3. Summary of the metric(s) used in digital pathology detection challenges. Bolded values are used for the final ranking.

4.2.2 Classification challenges

A summary of the metrics used in digital pathology classification challenges is presented in Table 4.4. Compared to the detection tasks, there is a lot more diversity in the choices made by challenge organisers. All of the multi-class tasks in these challenges (except NuCLS 2021) have some form of ordering present in their categories. For the binary classification tasks, many challenges are assessed with a positive-class only metric, as shown with CAMELYON 2016 and PatchCamelyon 2019 (AUROC of the metastasis class), DigestPath 2019 (AUROC of the malignant class), HeroHE 2019 (F1-Score, AUROC, SEN and PRE of the HER2-positive class) and PAIP 2020 (F1-Score of the MSI-High class). In the multi-class case, the TUPAC 2016 and PANDA 2020 evaluations use the weighted quadratic kappa, while in MITOS-ATYPIA 2014 a “penalty” system is used for errors of more than one class. In several challenges, however, this ordering is not taken into account at all. This is the case with the Brain Tumour DP 2014, BIOIMAGING 2015 and BACH 2018 challenges, where the simple accuracy is used, and for the C-NMC 2019 challenge, where a weighted macro-averaged is preferred.

| Challenge | Classes | Metric(s) |

|---|---|---|

| Brain Tumour DP 2014 | Low Grade Glioma / Gliobastoma | ACC |

| MITOS-ATYPIA-14 | Nuclear atypia score (1-3) | ACC with penalty5 |

| BIOIMAGING15 | Normal, benign, in situ, invasive | ACC6 |

| TUPAC16 | Proliferation score (1-3) | |

| CAMELYON16 | Metastasis / No metastasis | |

| BACH18 | Normal, benign, in situ, invasive | ACC |

| C-NMC19 | Normal, malignant | Weighted sF17 |

| Gleason 2019 | Gleason grades | (included in a custom score) |

| PatchCamelyon19 | Metastasis / No metastasis | |

| DigestPath19 | Benign / Malignant | |

| HeroHE20 | HER2 positive / negative | , , , |

| PANDA20 | Gleason group (1-5) | |

| PAIP20 | MSI-High / MSI-Low | |

| NuCLS21 | Different types of nuclei |

These classification tasks make the boundaries between detection, classification and regression tasks sometimes difficult to determine. In HeroHE 2020, for instance, the ranking of the algorithm is based on the “F1-Score of the positive class,” which is a typical detection metric, but the AUROC of the positive class is also computed, which takes the “true negatives” into account and is typically a classification metric. The tasks that are explicitly about predicting a “score” all attempt to incorporate the notion of “distance” to the ground truth into their metric, which brings them closer to a regression task. The use of the quadratic kappa is also clearly related to its popularity in pathology and medical sciences in general, as it makes the results more relatable for medical experts. The danger of that particular metric, however, is that it may give a false sense of “interpretability,” as the number of classes and the target class distribution have a large effect on the perceived performance of an algorithm using that metric.

4.2.3 Segmentation challenges

Almost all segmentation challenge use overlap-based metrics (either the IoU or the DSC) as their main metric for ranking participating algorithms, as shown in Table 4.5. The only challenge to really use a distance-based metric as part of the ranking was the GlaS 2015 challenge, which ranked the per-object average HD and the per-object average DSC (as well as the detection F1 score) separately. PAIP 2021 used the HD as a matching criterion but did not use it for the ranking.

The BACH 2018 challenge is an outlier in this, as they use a custom score that loosely corresponds to a “per-pixel” accuracy, with ordered classes so that “larger” errors are penalized more.

Table 4.5. Summary of the metric(s) used in digital pathology segmentation challenges.

| Challenge | Target | Metric(s) |

|---|---|---|

| PR in HIMA 10 | Lymphocytes | DSC, IoU, HD, MAD |

| Brain Tumour DP 14 | Necrosis region | DSC |

| GlaS 2015 | Prostate glands | DSC, HD |

| SNI 15-18 | Nuclei | DSC8 |

| MoNuSeg 18 | Nuclei | IoU9 |

| BACH 18 | Benign / in situ / invasive cancer regions | Custom score |

| Gleason 19 | Gleason patterns | DSC10 (included in a custom score) |

| ACDC@LungHP 19 | Lung carcinoma | DSC |

| PAIP 19 | Tumour region | IoU |

| DigestPath 19 | Malignant glands | DSC |

| BCSS 19 | Tumour / stroma / inflammatory / necrosis / other tissue segmentation. | DSC |

| MoNuSAC 20 | Nuclei (epithelial / lymphocyte / neutrophil / macrophage) | IoU (as part of the PQ) |

| PAIP 20 | Tumour region | IoU |

| SegPC 21 | Multiple myeloma plasma cells | IoU |

| PAIP 21 | Perineural invasion | HD |

| NuCLS 21 | Nuclei (many classes) | IoU, DSC |

| WSSS4LUAD 21 | Tumour / stroma / normal tissue | IoU |

| CoNIC 22 | Nuclei (epithelial / lymphocyte / plasma / eosinophil / neutrophil / connective tissue) | IoU (as part of the PQ) |

4.3 State-of-the-art of the analyses of metrics

There is a growing amount of literature on the topic of evaluation metrics, within the context of biomedical imaging or in general. Reinke et al. [40] thoroughly examine the pitfalls and limitations of common image analysis metrics in the context of biomedical imaging. Luque et al. [32] examine the impact of class imbalance in binary classification metrics. Their methods and conclusions will be explored more deeply in section 4.3.3, and we extend their analysis in section 4.4.2. Chicco et al. [7] compare the MCC to several other binary classification metrics and find it generally more reliable. Delgado et al. [9] describes the limitations of Cohen’s kappa compared to the Matthews correlation coefficient, and some of their results are discussed below in section 4.3.2. Grandini et al [21] propose an overview of multi-class classification metrics, a topic generally less explored in the literature. Padilla et al. [38], [39] compare object detection metrics and note the importance of precisely defining the metrics used, as the AP, for instance, which can be computed in several ways.

Oksuz et al. [37] focus on object detection imbalance problems. They review the different sampling methods that can help to counteract the foreground-background imbalance to train machine learning algorithms. They identify four classes of such methods: hard sampling (where a subset of positive and negative examples with desired proportions is selected from the whole set), soft sampling (where the samples are weighted so that the background class samples contribution to the loss is diminished), sampling-free (where the architecture of the network, or the pipeline, are adapted to address the imbalance directly in the training with no particular sampling heuristic), and generative (where data augmentation is used also to correct the imbalance by increasing the minority class samples). In section 4.4.1, we also explore the effect of foreground-background imbalance on the evaluation process.

4.3.1 Relations between classification and detection metrics

Many evaluation metrics are related or take similar forms. The most obvious relationship is between the different forms of the F1-Score, which can be used as a detection measure, a classification measure, and a segmentation measure (as the DSC).

A somewhat less obvious relation exists between the F1-Score and unweighted Cohen’s kappa, as identified by Zijdenbos et al. [45]. In a binary classification where we consider a “positive” and a “negative” class, we have the confusion matrix:

And resolves to:

In a detection problem, TN is uncountable, but it can generally be assumed to verify:

With this assumption, for detection can be simplified to:

Which is the harmonic mean of the PRE and REC, i.e. the detection F1-Score.

Meanwhile, the same exercise applied to the MCC leads us to a “detection” version of:

Which is the geometric mean of the PRE and REC (note that this is different from the classification GM we used before, which was the geometric mean of the REC and SPE, not REC and PRE).

While the harmonic mean is typically the preferred averaging methods for ratios (such as the PRE and REC), the geometric mean will penalize less harshly small values for one of the averaged elements. For instance, for and , the geometric mean will still be equal to 0.3 while the harmonic mean will drop to 0.18.

4.3.2 Interpretation and problems of Cohen’s kappa

Cohen’s kappa is very popular in digital pathology. It is often associated to an “interpretation” scale such as this one [1]:

< 0: less disagreement than random chance.

0.00-0.20: slight agreement

0.21-0.40: fair agreement

0.41-0.60: moderate agreement

0.61-0.80: substantial agreement

0.81-1.00: almost perfect agreement

There is however some debate on the validity of these interpretation, with some authors suggesting that, for medical research in particular, a less optimistic view of the value should be used [34]. It should also be noted that the kappa values are highly dependent on the weights used, and that this interpretation can therefore be highly misleading if a weighted kappa is used. For instance, in McLean et al.’s study of interobserver agreement in Gleason scoring [35], looking at the unweighted kappa would lead to an interpretation of “slight agreement,” the linear kappa to “slight to fair agreement,” and the quadratic kappa to “fair to moderate agreement.”

This can be problematic when, for instance, Humphrey et al. [22] compare the average unweighted kappa of 0.435 of general pathologists on Gleason scoring from a study [2] with the 0.6-0.7 weighted kappa11 for urologic pathologists from another [1] to find an “enhanced interobserver agreement” for the specialists, instead of using the 0.47-0.64 unweighted kappa which was also reported in the same study, marking an improvement that is still very substantial but not quite as extreme as Humphrey’s paper suggests.

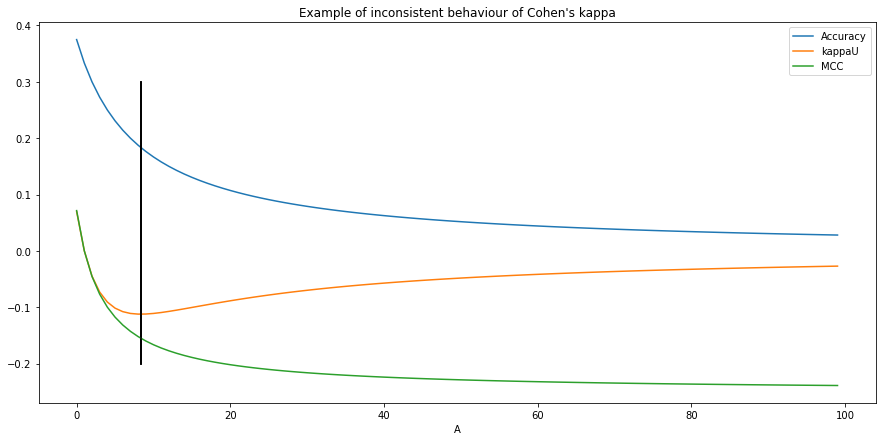

As noted by Delgado et al. [9], in some circumstances Cohen’s kappa can have an inconsistent behaviour, where a worse agreement leads to better results. In general, this can be demonstrated using the family of imbalanced confusion matrices, defined by:

So that everywhere except for , with . Intuitively, increasing means that at the same time we increase the imbalance, and we reduce the agreement. While we would expect in that case to be monotically decreasing, Delgado et al. show that it is not the case, and that after the inflexion point at the values start to increase. This can be verified experimentally, as we can see in Figure 4.7.

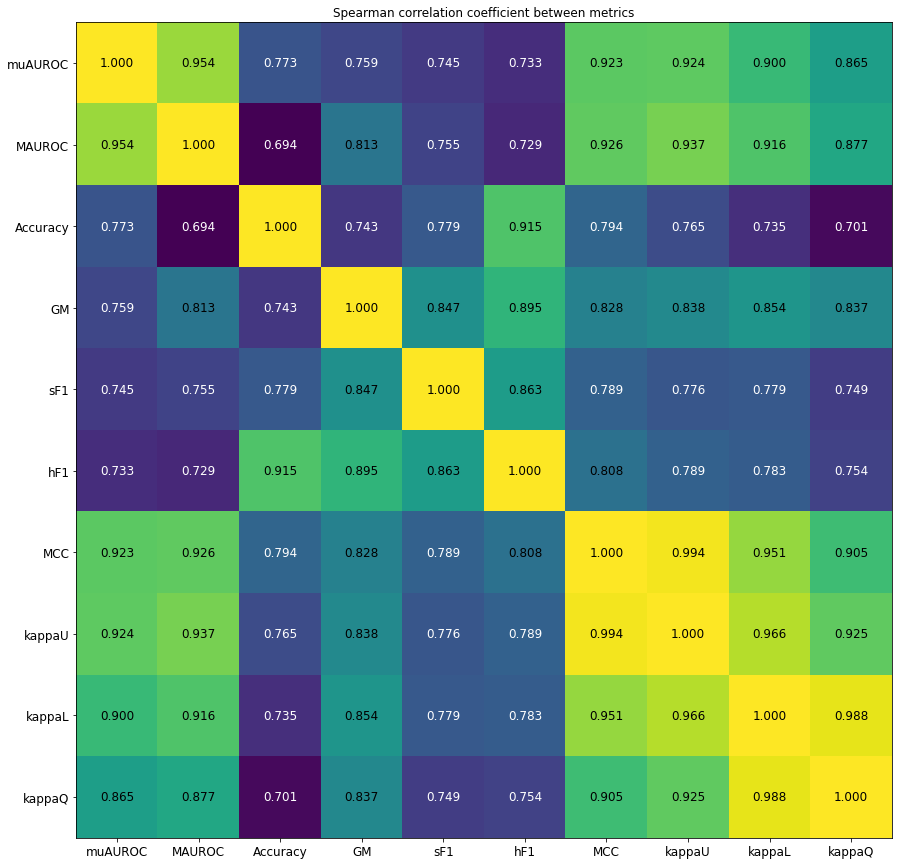

However, this is unlikely to happen, and in practice the in general agrees well with the MCC, as shown in our experiments in section 4.4.5.

4.3.3 Imbalanced datasets in classification tasks

The question of how classification metrics behave with imbalanced datasets was systematically explored by Luque et al. [32] for binary classification. In this section, we will briefly explain their method and their results. In section 4.4.2, we will extend their analysis to some metrics that they did not include, and to multi-class problems.

To analyse the metrics’ behaviour, the first step is to parametrize the confusion matrix so that it becomes a function of the sensitivity of each class, and of the class imbalance. Let be the sensitivity of class and the proportion of the dataset that is of class , so that and . As it is a binary classification problem, Luque et al. further define one of the classes as the positive class, and the other as the negative class. We therefore have , and we can then define the balance parameter , so that corresponds to balanced data, to an “all-negative” dataset, and to an “all-positive” dataset. The binary confusion matrix can be formulated as:

With the total number of samples in the dataset.

The study then shows how different classification metrics behave for different values of . The bias of a metric due to class imbalance can be characterized by looking at the difference between the metric measured for with the same metric measured at (i.e. for the same per-class sensitivities in a balanced dataset). Figure 4.8 shows for instance how the ACC behaves with imbalanced datasets. The interpretation of the top row is that, as the balance skews towards one of the two classes, only the performance of that class influences the result of the metric, as the isocontours (shown at 0.1 intervals in the figures) become parallel to either the horizontal or the vertical axis. In the bottom row, we can visualise the bias that it introduces for medium () and very high () imbalance according to per-class sensitivities. By contrast, the unbiased behaviour of the GM is shown in Figure 4.9.

![Figure 4.8. Behaviour of the ACC in a binary classification task with different class imbalance. Top row: result of the metric with isocontours at 0.1 intervals. Bottom row: imbalance bias (difference with the metric at \delta = 0), with negative values in blue and positive values in red. For the accuracy, the range of the bias is [-0.49, 0.49].](./fig/4-8.png)

In Table 4.6, we reproduce the range of bias found in Luque et al. [32] for several classification metrics. It should be noted that, for the MCC, the metric is “normalized” with so that its value is limited to the same [0, 1] range as the other metrics, to make the comparisons more accurate.

| Metric | Range of bias |

|---|---|

| [-0.49, 0.49] | |

| [-0.86, 0.33] | |

| [0, 0] | |

| [-0.34, 0.34] |

A limitation of their study is that the F1-Score that they used is the , the “per-class” F1-score of the “positive” class. This is a very common choice in binary classification problems, but it makes the comparison with the , and slightly uneven, as the is a class-specific metric while the others are all global metrics. In fact, the here is not really a binary classification metric, but rather a detection metric, as it does not consider a “two classes” problem but rather a “one class-vs-all” problem.

4.3.4 Statistical tests

When looking at results from different algorithms on a given task, it can be very difficult to determine if the difference in metrics between methods can be considered “significant.” Part of the question is related to the various sources of uncertainty that come with the annotation process, and will be discussed more in the following chapters, such as interobserver variability and imperfect annotations. Even if we assume “perfect” annotations, however, it is still necessary to determine if a difference in results may be attributed simply to random sampling, or if we can safely reject that hypothesis.

Let’s consider datasets , which are sampled from a larger, potentially infinite population , and a performance metric computed on the output of an algorithm for the dataset . Ideally, the null hypothesis would be that the samples of for the algorithms that we want to compare come from the same distribution.

Practically, however, we generally have a single test dataset which we want to use for our comparison. Different statistical tests have been proposed to provide some confidence in the detection of significant differences in algorithms, depending on the situation. Dietterich [11], for instance, proposes to use the McNemar test for comparing the performances of two classifiers. McNemar’s test is based on the contingency table between two classifiers:

| = Number of examples misclassified by and | = Number of examples well classified by only |

| = Number of examples well classified bv only | = Number of examples well classified by and |

The statistic computed is:

It follows the distribution with one degree of freedom under the null hypothesis.

In general, however, the goal is to be able to compare multiple algorithms based on arbitrary evaluation metrics and the same test set. The recommended test for comparing multiple algorithms on the same data is the Friedman non-parametric test [10]–[31]. The Friedman test tells if the null hypothesis that all the tested and dependent samples (in this case the values of the metrics) come from the same distribution can be rejected. To determine the pairwise significance of the differences between algorithms, a post hoc test must be conducted. The Nemenyi post hoc is a recommended choice [10], although some suggest replacing the post hoc by pairwise Wilcoxon signed-rank tests [4]. The Friedman and Nemenyi tests were used in the statistical score we used in our experiments on imperfect annotations [13], [14] (see Chapter 5).

The Friedman test is the non-parametric version of the repeated-measures ANOVA [10]. If we consider a test set that can be divided into non-overlapping subsets (for instance, may contain all the patches from a patient in a patch classification task, or all the objects from a patch in a detection or instance segmentation task), so that a performance metric for the algorithms can be computed as .

First, the rank of each algorithm is computed on each subset: , where for the algorithm where . For each algorithm, the ranks average is then computed: . If all algorithms are equivalent, then their average ranks should be equal. The statistic used to test if this null hypothesis can be rejected is [10]:

Under the null hypothesis, this statistic follows a distribution with degrees of freedom. If the null hypothesis is rejected according to a p-value threshold (typically), the Nemenyi post hoc can be used to determine which algorithms are significantly different from each other. The Nemenyi test is based on the average rank difference, computed during the Friedman test, between pairs of algorithms. The ranks depend not only on the performance of the two algorithms being considered, but also on the performance of all the other algorithms compared in the Friedman test. This can be problematic, as it can lead to situations where two algorithms are considered to be significantly different if compared as part of one set of algorithms, and not significant if compared as part of another. For this reason, some recommend using the Wilcoxon signed-rank test instead of the Nemenyi for the pairwise analysis (adjusting the significance level to correct for the Type-I error, for instance by dividing the confidence level by the number of compared methods minus one) [10], [4].

Practically, this type of analysis is unfortunately rarely used when comparing deep learning algorithms. At best, challenges will sometimes provide box-plots of the distributions of results for the top teams (as for instance in the ACDC@LungHP 2019 [29] or in the PAIP 2019 [26] challenges), or 95% confidence intervals alongside the average results (as in MoNuSeg 2018 [28]).

4.4 Experiments and original analyses

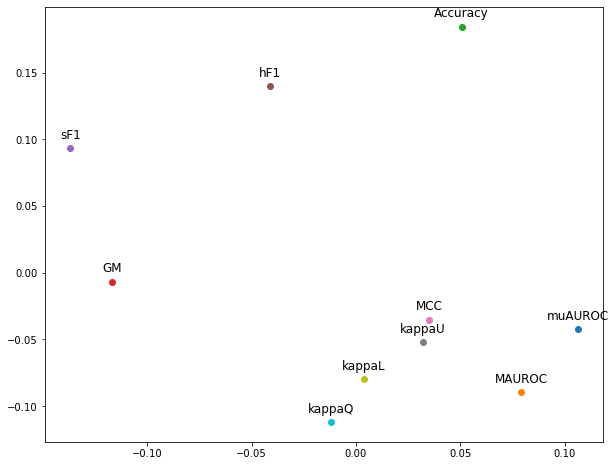

In this section, we present several experiments that we performed in order to extend these analyses, or to explore more thoroughly some of the most interesting aspects of the behaviour of the metrics. Specifically, we will look at the effects of different types of class imbalance (4.4.1, 4.4.2), the consequences of the fuzzy boundary between detection and classification tasks (4.4.3, 4.4.4), the agreement between the scores given by different classification metrics for the same set of predictions (4.4.5), the biases of common segmentation metrics (4.4.6), the difficulties in multi-metrics aggregation (4.4.7) and, finally, at why the Panoptic Quality should be avoided for instance segmentation and classification problems (4.4.8).

4.4.1 Foreground-background imbalance in detection metrics

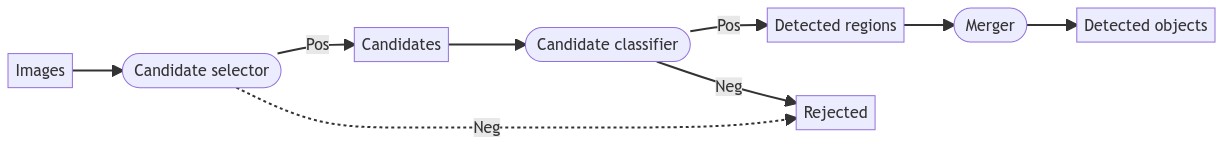

As detection tasks are “one class versus all” problems, they are often associated with a very large “foreground-background” imbalance, meaning that the objects of interest are sparsely distributed in the images. This is particularly true in the most popular detection task in digital pathology: mitosis detection.

In the MITOS12 mitosis detection challenge, for instance, the training dataset consists of 35 image patches, each with dimensions of 2048×2048 pixels. There are 226 mitoses in this training set, occupying a total mitosis area of around 135.000 pixels, which corresponds to about 0.09% of the whole area.

The detection process, illustrated in Figure 4.10, typically involves three main phases: first finding “candidate” regions with a “candidate selector,” then classifying each candidate as a positive or negative detection with a “candidate classifier,” then finally merging overlapping regions into detected object instances.

For the first phase, there are two main strategies: either create a very large number of candidates by densely sampling the space of possible bounding boxes, or create a restricted set of candidates, using the image features already in the first phase to reject “obvious” negatives. The main advantage of the first approach is that it generally ensures that no positive object is accidentally rejected in the first phase, and therefore not even presented to the candidate classifier. The main advantage of the second is that it makes the task of the candidate classifier a lot easier.

The overall performance of the whole detection pipeline, however, is not just affected by the performance of the selector, classifier and merger, but also, as in all machine learning tasks, by the selection of the possible data used for the evaluation. In the MITOS12 dataset, the 35 image patches correspond to small portions of 5 WSI, selected because of the presence of several mitosis. When the choice of regions of interest is not random, the distribution of positive & negatives changes with the size of the selected region. Taking a larger region “around” the mitosis that first attracted the attention of the pathologist is likely to increase the proportion of negatives, while taking a narrower region would increase the proportion of positives. Given the sparsity of the objects of interest, the effect can quickly become large.

To quickly simulate how this may affect the results of a detection pipeline, we define a detector with the following characteristics:

The “candidate selection” step filters out 99.99% of the possible candidates at the pixel level, assuming that we define the set of possible candidates as same-sized patches centred on each pixel of the image (so there are as many possible candidates before selection as there are pixels in the image). This assumes that most possible candidates will be trivially dismissed, which is not unrealistic in a mitosis detection problem, as all pixels that are relatively lighter are certain not to be part of a mitosis (see Figure 4.11).

The “candidate classifier” step has a 99% specificity and 75% sensitivity rate.

We then look at three scenarios. In the first one, we take the characteristics of the MITOS12 dataset (226 mitosis occupying 0.09% of the total pixel area). In the second, we imagine a more restricted set where a smaller region of interest was considered. We look at what happens if, when restricting the total area by half, we keep about 75% of the mitosis. In the third scenario, we do the opposite and double the total area, and simulate that this includes 150% of the original amount of mitosis. The results presented in Table 4.7 show that, while the recall in all cases remains the same (as it is equal to the sensitivity that we fixed as a parameter of the pipeline), the precision varies as the foreground-background imbalance changes. As proportionally more negative examples are shown, the number of false positives can only increase and the number of true positives can only decrease, so the precision and F1-score can only get worse.

| Scenario | Precision | Recall | F1 |

|---|---|---|---|

| MITOS12 distribution | |||

| Smaller region | |||

| Larger region | |||

| MITOS-ATYPIA-14 distribution |

Of course, as long as methods are compared on exactly the same test set, a ranking of the methods based on the F1-Score should still be representative of their relative performance. However, the characteristics of the dataset are still important to keep in mind when interpreting the results, particularly when the same task is measured on different datasets. The MITOS-ATYPIA-14 dataset, for instance, has a much lower ratio of mitosis to total area, with mitosis representing 0.02% of the total area of the patches. A detection pipeline with the same behaviour as the one we simulated on MITOS12 would see its precision fall from 0.55 to 0.18, and its F1-score from 0.63 to 0.29 just by this change of distribution.

It is tempting to see that as the main explanation for the difference in results observed between the two challenges, as the top result for MITOS12 was a 0.78 F1-score, while it was 0.36 for MITOS-ATYPIA-14. There were, however, less participants in the mitosis detection part of the challenge in the 2014 version, and the MITOS12 challenge was also designed with a problematic train/test split, with patches extracted from the same WSI appearing in both parts of the dataset. The difference in the mitosis density is very likely, however, to be a contributing factor.

4.4.2 Imbalanced datasets in classification tasks

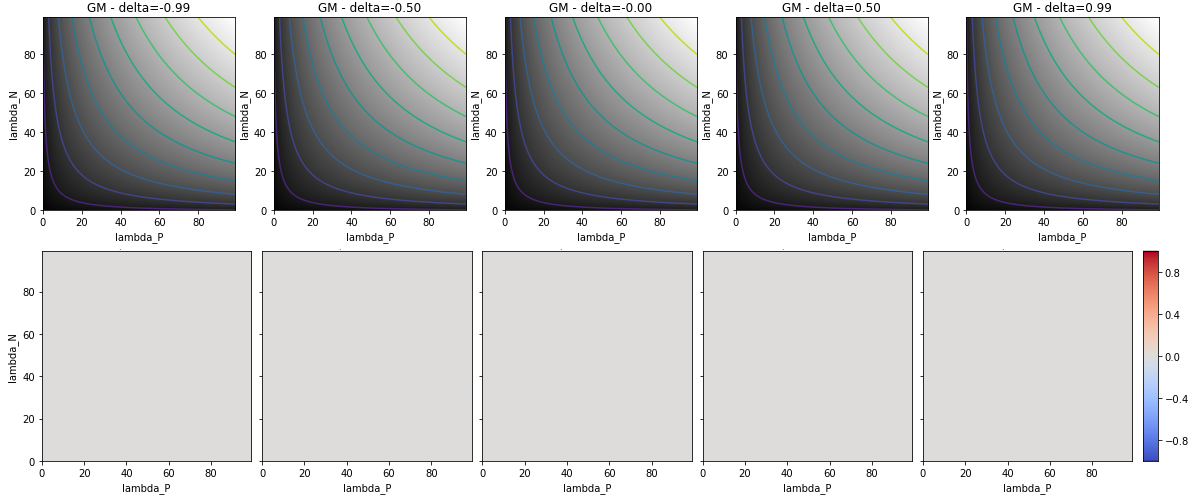

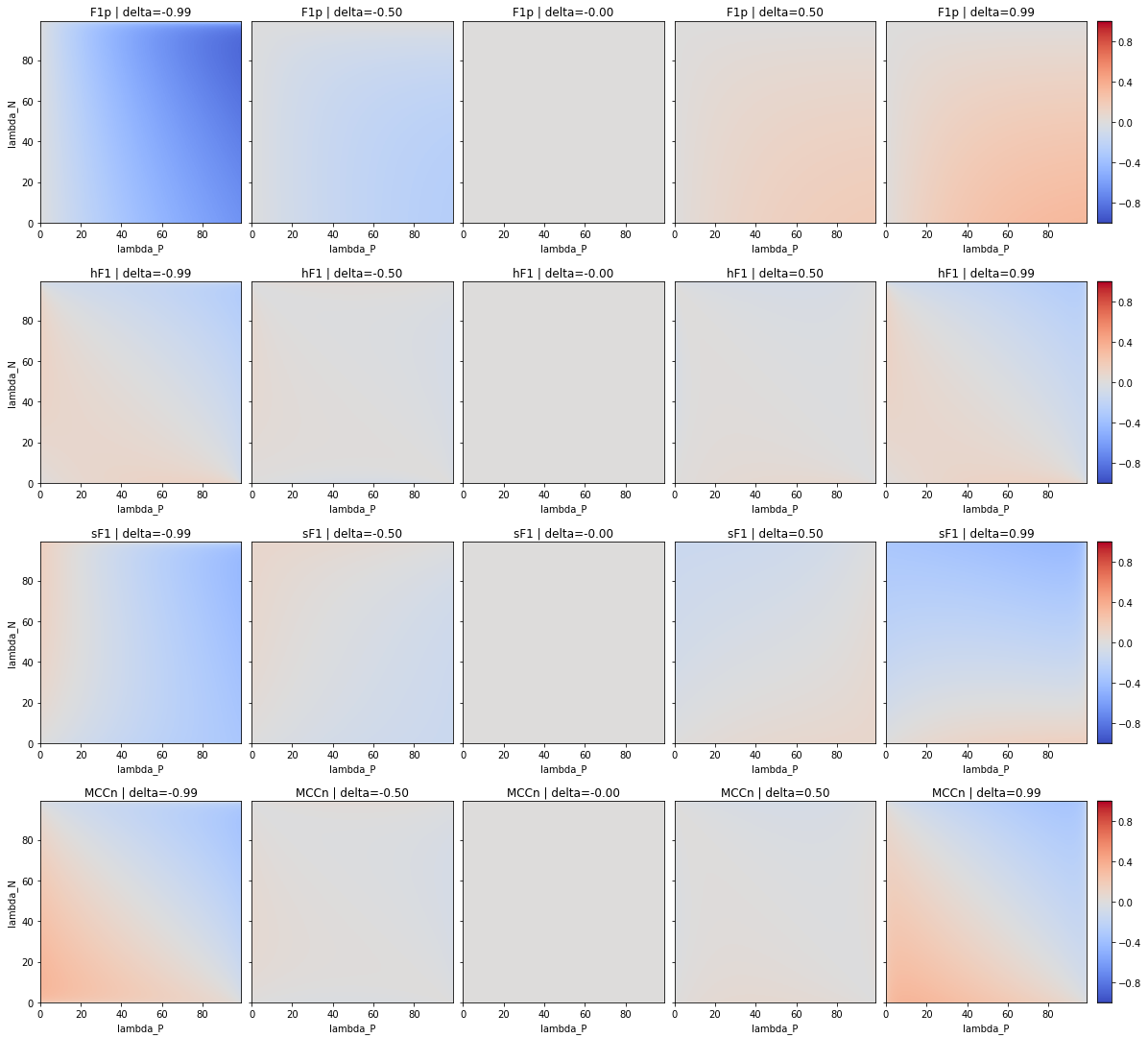

4.4.2.1 Extension to other metrics

As noted in section 4.3.3, the analysis of Luque et al. [32] on the effects of imbalanced datasets in classification tasks uses a “detection” F1-Score, which is not a global metric for a classification problem. We therefore reproduce the experiment with the two version of the macro-averaged F1-score, the and the . We also add the , normalized similarly as the (cf. section 4.3.3), for completeness’ sake. Our results are presented in Table 4.8, and a comparison between the biases of the , the , the and the is shown in Figure 4.12.

| Metric | Range of bias |

|---|---|

| [-0.43, 0.16] | |

| [-0.28, 0.14] | |

| [-0.41, 0.49] |

As we can see, the shape of the bias is very similar between the and the . While the is clearly extremely biased with imbalanced datasets and should generally be avoided as a binary classification metric, the use of a macro-averaged version of the metric reduces its bias quite significantly and puts it in the same range as the (see Table 4.6) for the , and even lower for the . The latter therefore appears to be more robust to class imbalance than the MCC.

4.4.2.2 Extension to multi-class problems

In a multi-class problem, the notion of a “positive” and “negative” class has to be removed. The generic parametrized confusion matrix is therefore, for classes:

Where is the total number of samples, is the proportion of class in the dataset, and is the proportion of class samples that were misclassified as class , so that and .

This leads to a parametric space with a very high dimensionality, that is quite complex to explore. For a 3-class problem, we would have two “balance” parameters and (which set ) and six “error distribution” parameters (which set , etc.). In general, for a class problem, we would have balance parameters and error distribution parameters.

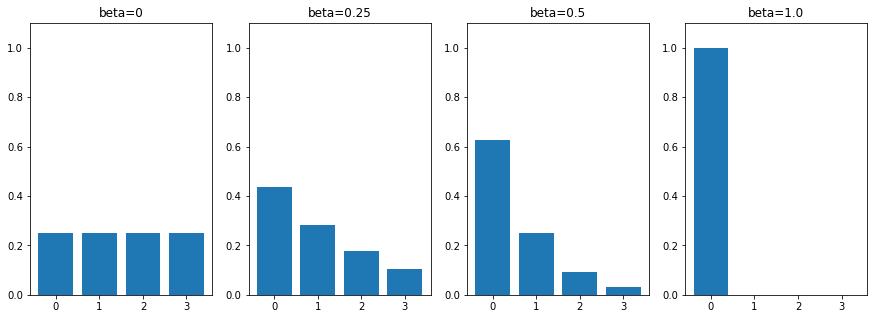

To reduce this dimensionality, we define a single balance parameter that will vary from 0 (balanced) to 1 (extremely imbalanced). We also define , and then recursively and . An example of the class distributions yielded by this method for a 4-class problem and different values is shown in Figure 4.13.

For the error distribution, we consider three scenarios that allow us to only have as parameters the class sensitivity values:

The evenly distributed error (ED) scenario, where we set .

The randomly distributed error (RD) scenario, where we first generate a random “error likelihood” matrix so that and , and the are then simply computed as . The error matrix needs to be computed first so that the same “error likelihood” is used when exploring the space of values.

The majority bias error (MB) scenario, where the errors are distributed more towards the majority class, so that .

The three scenarios are illustrated in Figure 4.14. The biases related to the class imbalance are reported in Table 4.9 for and , in the three error distribution scenarios. The imbalance bias for the and the increases as the number of classes increases. For the , the main effect seems to be that the negative bias (i.e. the imbalanced result is worse than the balanced result at the same sensitivity values) becomes more important than the positive bias, so the in general will be lower in a multi-class, imbalanced dataset. The keeps a relatively constant bias. The sees the range of its bias reduced, but only on the positive side. Finally, the GM remains unbiased.

| - | [-0.49, 0.49] | [0, 0] | [-0.34, 0.34] | [-0.28, 0.14] | [-0.43, 0.16] | [-0.41, 0.49] |

| ED | [-0.65, 0.65] | [0, 0] | [-0.36, 0.2] | [-0.42, 0.13] | [-0.42, 0.18] | [-0.42, 0.24] |

| RD | [-0.65, 0.65] | [0, 0] | [-0.37, 0.21] | [-0.43, 0.12] | [-0.42, 0.17] | [-0.42, 0.24] |

| MB | [-0.65, 0.65] | [0, 0] | [-0.37, 0.17] | [-0.42, 0.14] | [-0.44, 0.18] | [-0.42, 0.24] |

| ED | [-0.73, 0.73] | [0, 0] | [-0.38, 0.19] | [-0.52, 0.11] | [-0.41, 0.19] | [-0.43, 0.20] |

| RD | [-0.73, 0.73] | [0, 0] | [-0.38, 0.18] | [-0.52, 0.11] | [-0.41, 0.23] | [-0.43, 0.20] |

| MB | [-0.73, 0.73] | [0, 0] | [-0.45, 0.19] | [-0.52, 0.14] | [-0.45, 0.18] | [-0.43, 0.20] |

4.4.2.3 Normalized confusion matrix to counter class imbalance

To counteract the bias induced by class imbalance, the most obvious solution is to compute the metrics on the balanced (or normalized) confusion matrix (NCM). The NCM is computed by simply dividing each element by the sum of the values on the same row:

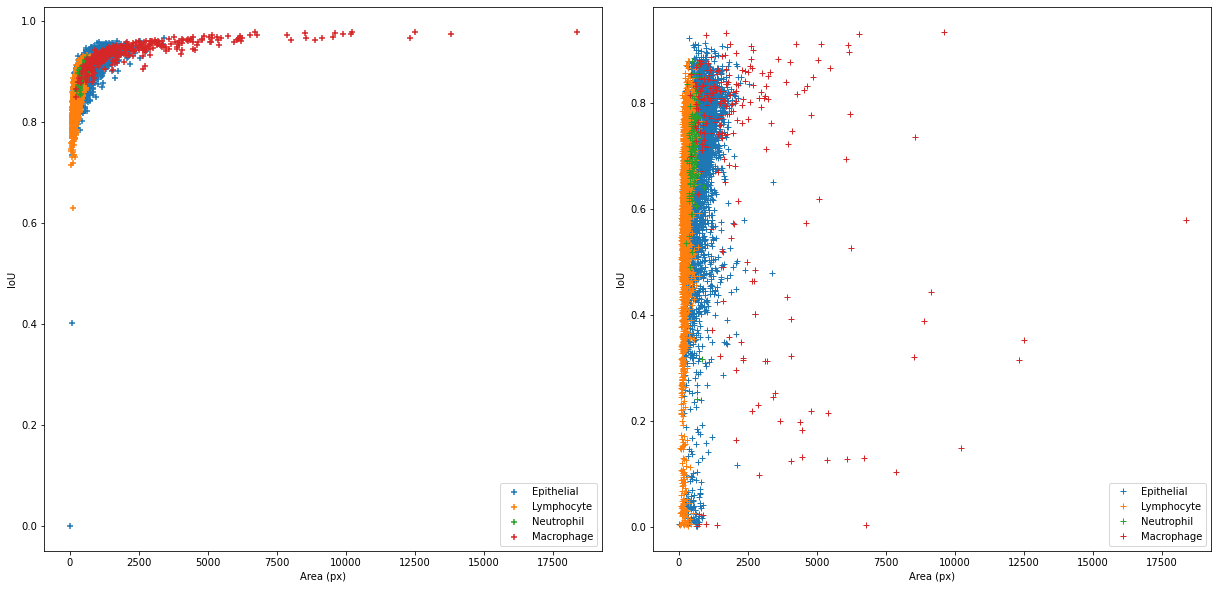

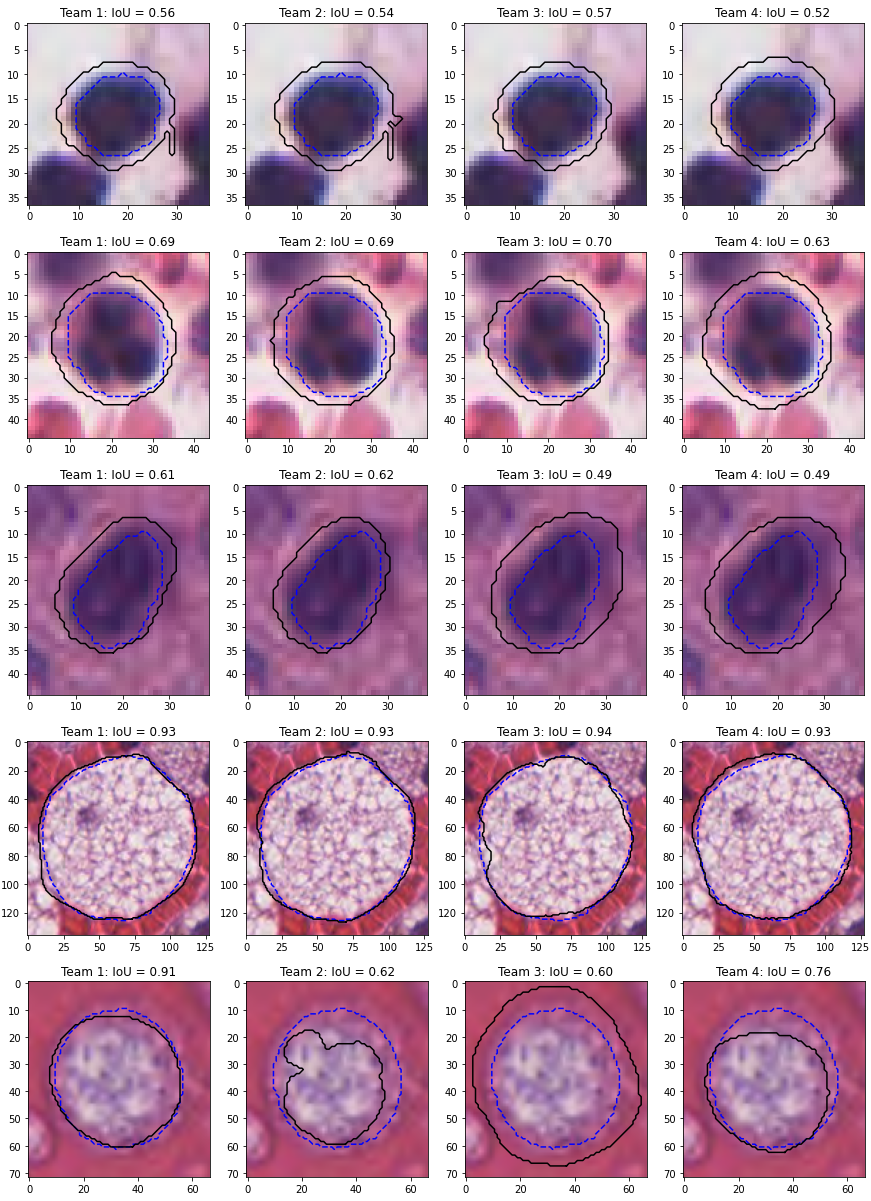

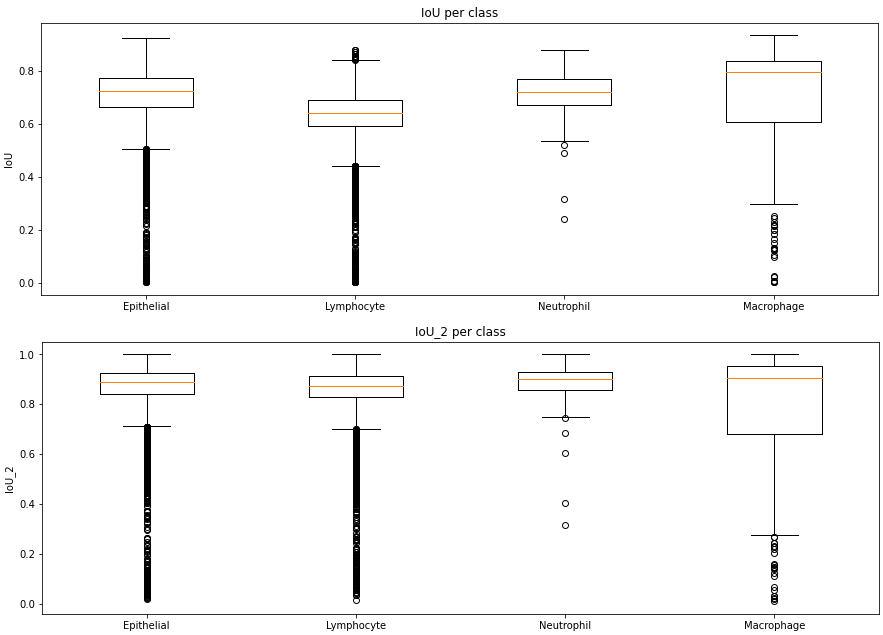

This acts as a “resampling” of the data so that each class has the same number of samples, while keeping the per-class sensitivity values intact. This solution, however, is not without its own drawbacks. To illustrate these, we need to use some real data. The results of the MoNuSAC 2020 challenge can be used in this case: as the prediction maps of four teams are available, it is possible to compute alternate metrics to those reported in the challenge results.

The MoNuSAC challenge is a nuclei instance segmentation and classification challenge. We will focus here on the classification part of the challenge, which has four classes (epithelial, neutrophil, lymphocyte and macrophage). From the published prediction maps, we can compute the classification confusion matrices (based on the matching pairs of detected nuclei, which will therefore vary between the teams), shown in Table 4.10, and the number of per-class TP, FP and FN for the detection task, shown in Table 4.11. A full description of the MoNuSAC dataset is given in Annex A.

| Team 1 | E | L | N | M | Team 2 | E | L | N | M |

|---|---|---|---|---|---|---|---|---|---|

| E | 6098 | 260 | 8 | 12 | E | 6240 | 88 | 0 | 57 |

| L | 79 | 7214 | 2 | 1 | L | 162 | 7131 | 4 | 6 |

| 6 | 5 | 39 | 118 | 2 | N | 1 | 18 | 133 | 10 |

| M | 16 | 11 | 8 | 170 | M | 16 | 1 | 11 | 164 |

| Team 3 | E | L | N | M | Team 4 | E | L | N | M |

| E | 5960 | 96 | 0 | 1 | E | 6193 | 302 | 2 | 22 |

| L | 76 | 6864 | 3 | 0 | L | 179 | 7274 | 1 | 0 |

| N | 1 | 20 | 137 | 3 | N | 3 | 38 | 117 | 2 |

| M | 30 | 8 | 11 | 159 | M | 30 | 7 | 25 | 155 |

| FP detections | E | L | N | M |

|---|---|---|---|---|

| Team 1 | 1338 | 829 | 14 | 59 |

| Team 2 | 962 | 629 | 5 | 285 |

| Team 3 | 2932 | 1545 | 50 | 99 |

| Team 4 | 1035 | 770 | 10 | 13 |

| FN detections | E | L | N | M |

| Team 1 | 831 | 507 | 8 | 102 |

| Team 2 | 824 | 500 | 10 | 115 |

| Team 3 | 1152 | 860 | 11 | 99 |

| Team 4 | 690 | 349 | 12 | 90 |

| TP detections | E | L | N | M |

| Team 1 | 6378 | 7296 | 164 | 205 |

| Team 2 | 6385 | 7303 | 162 | 192 |

| Team 3 | 6057 | 6942 | 161 | 208 |

| Team 4 | 6519 | 7454 | 160 | 217 |

From these results, we can look at the relationship between the values and the class imbalance. From Table 4.12, we can see that the two majority classes are associated to much higher sensitivity values than the two minority classes. If we are trying to judge how the algorithm would perform “in a world where the data is balanced,” this normalization may therefore not be completely accurate.

| Range | Epithelial | Lymphocyte | Neutrophil | Macrophage |

|---|---|---|---|---|

| Annotations | 0.47 | 0.50 | 0.01 | 0.02 |

| Predicted | [0.46 - 0.50] | [0.47 - 0.52] | [0.01 - 0.01] | [0.01 - 0.03] |

| Detection | [0.84 - 0.90] | [0.89 - 0.96] | [0.93 - 0.95] | [0.63 - 0.71] |

| Classif. | [0.95 - 0.98] | [0.98 - 0.99] | [0.72 - 0.85] | [0.71 - 0.85] |

Another possible problem that may arise from the normalization is that, if we have a very large imbalance with some very rare classes, we may artificially increase what is simply “sampling noise.” To illustrate this, we again turn to the MoNuSAC results. In this experiment, we keep all the properties of the dataset (class distribution of the ground truth) and of each team’s results (detection recall and per-class distribution of classification errors, represented by the ). We then randomly sample N nuclei from an “infinite” pool of samples that has the same class proportions as the ground truth and compute each team’s confusion matrix based on that sampling. On average, we will obtain confusion matrices that are the same as those of the challenge, but we will also be able to see the variation due to random sampling.

In Table 4.13, we report the results from 5.000 runs of the simulation based on 1.000, 5.000 or 15.000 samples (15.000 being close to the real number of annotated nuclei in the MoNuSAC test set). We show, for each classification metric, the maximum divergence observed across the four teams (, with the x percentile) as an uncertainty measure. As we can see, if we have many annotated samples like in MoNuSAC, the uncertainty due to the random sampling remains very low even in the NCM. If we have a smaller set of samples, however, this uncertainty can quickly grow, at least for the and . As the metric is unbiased relative to the imbalance, the results do not change between CM and NCM, but the random sampling uncertainty is very high. The also has a high uncertainty overall.

| 15.000 samples | ||||||

|---|---|---|---|---|---|---|

| CM | 0.001 | 0.003 | 0.001 | 0.006 | 0.001 | 0.001 |

| NCM | 0.003 | 0.003 | 0.002 | 0.003 | 0.002 | 0.002 |

| 5.000 samples | ||||||

| CM | 0.002 | 0.011 | 0.002 | 0.011 | 0.001 | 0.002 |

| NCM | 0.010 | 0.011 | 0.006 | 0.009 | 0.007 | 0.006 |

| 1.000 samples | ||||||

| CM | 0.006 | 0.056 | 0.005 | 0.046 | 0.003 | 0.005 |

| NCM | 0.052 | 0.056 | 0.035 | 0.054 | 0.035 | 0.034 |

Overall, the uncertainty added by using the NCM instead of the CM remains relatively low but, if the sample size is small, it is still in a range that could potentially affect the comparison between algorithms (as many biomedical datasets have much fewer than 1.000 samples available).

4.4.2.4 Conclusions on imbalanced datasets in classification tasks

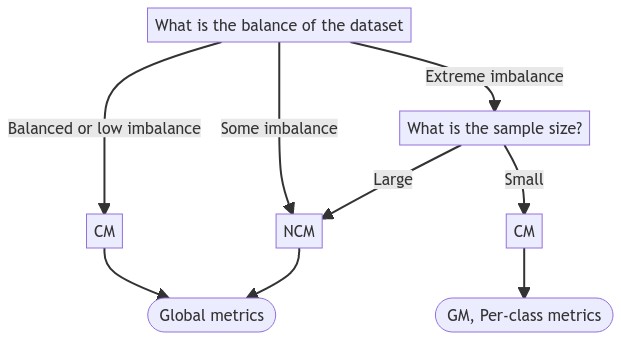

Given the above analysis, the flowchart presented in Figure 4.15 attempts to summarize how to decide which classification metrics to use based on the class imbalance of the dataset.

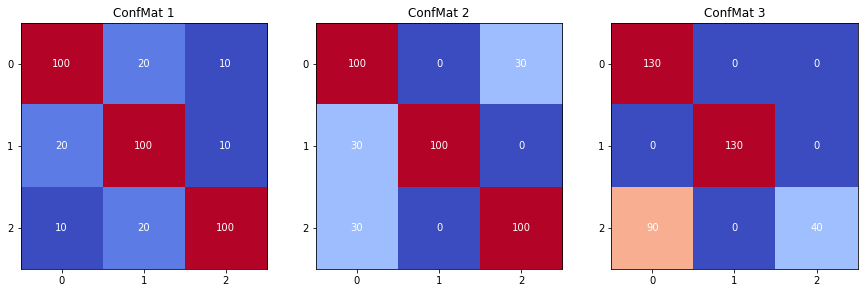

With mostly balanced datasets, the confusion matrix can be used directly to compute any global metrics. Which of these to use will mostly depend on whether the distribution of the errors among the classes is important for the evaluation or not. Figure 4.16 shows three confusion matrices corresponding to a balanced dataset with the same total error, but with different distributions per class. Table 4.14 shows the results of the different global metrics for these confusion matrices.

| \ | ||||||

|---|---|---|---|---|---|---|

| ConfMat 1 | 0.769 | 0.769 | 0.654 | 0.654 | 0.771 | 0.755 |

| ConfMat 2 | 0.769 | 0.769 | 0.654 | 0.660 | 0.783 | 0.780 |

| ConfMat 3 | 0.769 | 0.675 | 0.654 | 0.713 | 0.814 | 0.871 |

Obviously, the accuracy is the same for all three: it is completely independent from how the error is distributed, as long as the total error remains the same. Less obvious is the invariance of the unweighted kappa, which is expected to depend also on the distribution of the predicted classes, which here varies between the confusion matrices. With a perfectly balanced dataset, however, we can show that the is actually independent from the error distribution.

Using the original definition of the kappa:

It is clear that , which is the accuracy, will not vary. Meanwhile, we have for classes and samples:

And, in a balanced dataset:

That is, the sum of each row will be the same. Therefore:

And finally:

Which is illustrated in Table 4.14 with . If there is any class imbalance, this relationship no longer holds true.

The Geometric Mean is equal for the first two confusion matrices, for which the are equal whereas the errors in each class are distributed differently. The Matthews Correlation Coefficient and the two macro-averaged F1 scores, on the other hand, have different values for the three confusion matrices, and are therefore affected by changes in the distribution of errors. Whether this is a desired trait for the metric depends on the task and the evaluation criteria. It is interesting to note, however, that the latter three increase as the error gets progressively more concentrated in the three confusion matrices, even in the third one where all the prediction errors concern one class only. This contrasts greatly with the GM, which penalises the third confusion matrix a lot more, as the much smaller for class 2 will greatly affect the score.

If the dataset is imbalanced but with still a reasonable number of samples of each class (what is a reasonable amount will depend on how much sampling uncertainty is acceptable for the purpose of the evaluation of the task), using the normalized confusion matrix to reduce the problem to a balanced problem is a good solution, with the same choice of metrics available.

In cases of extreme imbalance with a small sample size, where there are very few samples available for the minority class, the normalization may induce too much sampling uncertainty. It is therefore probably advisable in those cases to focus on an unbiased global metric like the GM, or on class-specific metrics separately.

4.4.3 Limit between detection and classification

An interesting aspect of object detection tasks is their close relationship to binary classification tasks. The main difference, in the definition we have used for the task, is that an “object detection” task does not have a countable number of true negatives, but as we have seen a detection pipeline is often composed of a “candidate selection” step and a “classification” step.